Solix Empower Conference Invite

When I look back at when we started Solix, I can't help but marvel at how much has changed. When we began this journey, ERP databases hovered around a few terabytes; now they run to hundreds. Data security at that time meant protecting copies of production data used for testing, training and quality control. The goal was simply to keep those copies safe while keeping capital costs down. Now we must not only mask test copies of data; we must also be able to predict who is trying to steal it.

Today, we're in a time of great change. In the last decade the amount of data in the world has grown exponentially and now measures in the zettabytes. Data is coming at every industry in greater volume, velocity and variety. Enterprises rely on these massive amounts of data for BI and are looking to mine it with advanced analytics. The ERP system and the Enterprise Data Warehouse are no longer sufficient to handle this onslaught. Hadoop has emerged as the answer for petabyte-scale data, but even Hadoop cannot address every challenge that data presents to today's enterprises, especially those that want to remain the enterprises of the future. Data has become the most important asset an organization can have in our increasingly digital world. The world is data driven, and enterprises must be as well or risk failure.

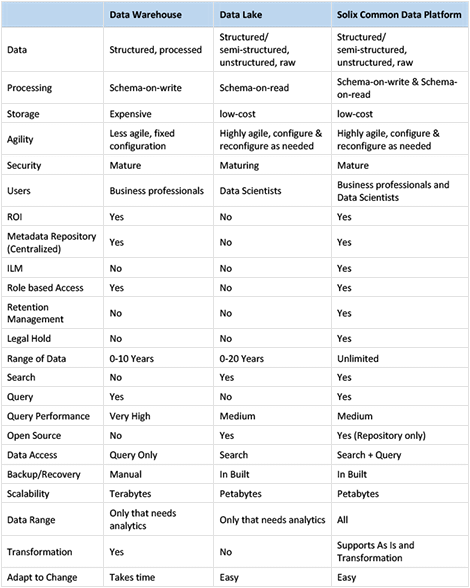

The Solix Common Data Platform (CDP) combines the strengths of the Data Lake and the Enterprise Archive with Information Governance. This architecture not only supports deep analytics but can also span the entire archive. Fears of data swamps can be put to rest, and the stress of figuring out how to pay for Tier 1 storage evaporates, because the CDP is built on commodity storage and commodity compute. Solix CDP makes the Data Lake truly enterprise ready for the first time and opens up vast possibilities for building an advanced analytics platform.

At Solix, we are uniquely positioned to empower our customers to become data-driven organizations. That is why we've announced our inaugural Solix EMPOWER conference, Sept. 18 at Northeastern University in San Jose, California. Solix EMPOWER is an education and networking conference that will bring together our customers, analysts, industry experts and partners to discuss analytics, Big Data and cloud technologies. We're thrilled with the lineup of speakers we've put together, which includes:

Herb Cunitz, President of Hortonworks.

Eli Collins, Chief Technologist at Cloudera.

Solomon Darwin, Executive Director at the UC Berkeley Haas School of Business.

Jnan Dash, former Senior Executive at Oracle.

Rafiq Dossani, Economist and Educationist at RAND Corporation.

And many more. To see the full list of speakers and the developing agenda, visit http://www.solixempower.com. Proceeds from every registration will also go to the Touch-A-Life Foundation, which benefits homeless high school students.

We're excited about Solix EMPOWER and the chance to explore the possibilities for enterprises in our increasingly digital world together. Please register for the event at http://www.solixempower.com/registration/order/. We look forward to seeing you.