The Strategic Imperative to Evolve from Tape to Disk/Object Storage in the AI-Ready Data Era

Executive Summary

As enterprises accelerate AI adoption across research, life sciences, healthcare, financial services, manufacturing, and public-sector domains, one thing has become unmistakably clear: AI systems derive their differentiation and competitive advantage from the depth, breadth, and continuity of historical data. Decades of accumulated knowledge, scientific research, clinical evidence, EHR/EMR histories, pharmaceutical trial datasets, industry telemetry, government and public sector datasets, and academic research archives now represent high-value strategic assets, not passive records.

For years, organizations relied on tape storage to meet compliance-driven archival requirements, disaster recovery obligations, and long-horizon retention mandates. Tape fulfilled a necessary purpose when retrieval was infrequent and when archives served a narrow operational function. However, the emergence of AI has fundamentally transformed the value of historical data. What once lived in cold storage as an insurance policy must now be integrated into modern AI development lifecycles, where access speed, data quality, retrievability, and lineage integrity determine the viability of advanced models.

Tape’s constraints (slow recall times, inconsistent recoverability, operational overhead, and limited accessibility) are increasingly incompatible with AI-driven enterprises. Worse, many organizations cannot even confirm whether decades of tape-archived data remain usable without resource-intensive restoration exercises. The legacy “archive-and-forget” model is no longer tenable in an era where historical datasets are directly correlated with innovation velocity, research breakthroughs, precision analytics, and AI model accuracy.

This shift requires reframing the conversation away from “Tape vs. Disk” as a hardware debate and toward a more strategic lens:

How do we architect AI-ready data pipelines that minimize friction, maximize accessibility, and ensure the long-term usability of enterprise knowledge?

This is fundamentally about data governance, AI competitiveness, regulatory alignment, and enterprise resilience.

Modern AI workloads (LLMs, predictive analytics, RAG systems, domain-specific intelligence engines, and scientific model training) depend not on recent data alone but on long-tail, multi-decade datasets. These include logs, transactions, biomedical imaging, molecular data, sensor traces, environmental observations, and institutional research.

Three trends converge to increase the importance of long-memory data:

- AI amplifies the value of long-horizon datasets

These records are no longer merely archival; they directly shape model performance, bias mitigation, forecast accuracy, and institutional knowledge continuity.

- Regulatory expectations are escalating

Emerging AI and data governance frameworks require lineage, reproducibility, transparency, and sovereignty, increasing the need to access decades of original data in auditable form.

- Fourth-generation data platforms prioritize AI-native architectures

These platforms assume frequent, automated, programmatic access to datasets that historically sat dormant on tape.

Tape, in this context, yields diminishing returns: it satisfies retention obligations but constrains AI potential.

From “Tape vs. Disk” to “Cold vs. Warm AI Pipelines”: A Modern Architectural Framework

AI transforms dormant archives into active corporate assets. Long-tail historical data, once considered too cold and costly to maintain, has now become the training substrate for next-generation AI models.

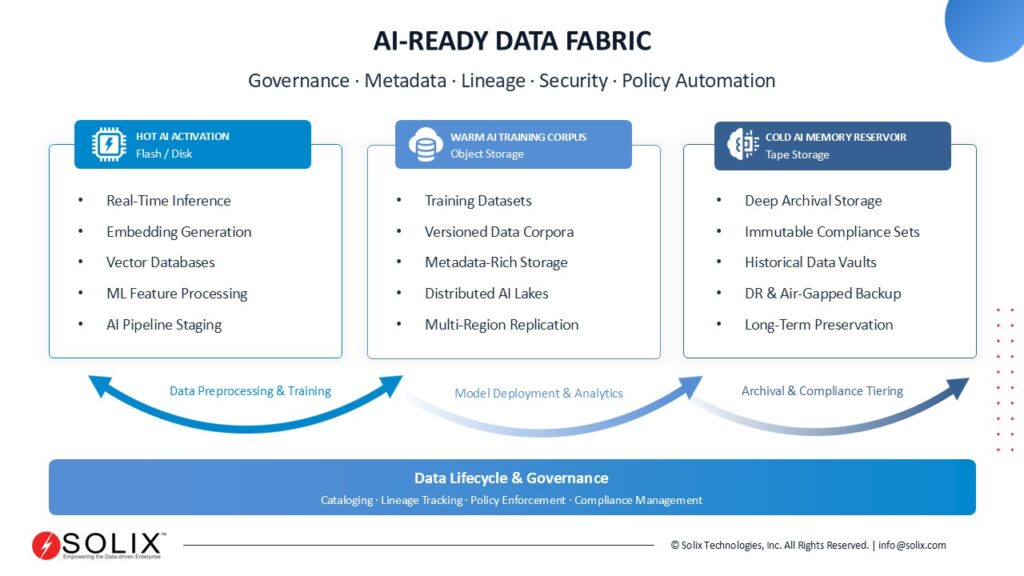

The architecture required to unlock this value is a three-tier, AI-ready data pipeline, in which tape continues to play a role but no longer as the dominant storage medium:

- Tier 1 – Hot AI Activation Layer (Flash/Disk): Optimized for high-performance ingestion, feature computation, embedding generation, vector indexing, model training, and real-time AI operations.

- Tier 2 – Warm AI Training Corpus Layer (Object Storage): Optimized for lifecycle management, dataset versioning, reproducibility, metadata-rich AI workflows, and high-throughput bulk access.

- Tier 3 – Cold AI Memory Reservoir (Tape): Used selectively for ultra-long-term, low-access retention of raw source-of-truth datasets, DR repositories, and immutable archives.
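The tier assignment above can be expressed as a simple placement policy. The sketch below is a minimal Python illustration, assuming access frequency, active-pipeline membership, and retention-only status are the deciding signals. The `Dataset` fields, the `place` function, and both thresholds are hypothetical and would need to be calibrated against real access telemetry.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    HOT = "Tier 1: Hot AI Activation Layer (flash/disk)"
    WARM = "Tier 2: Warm AI Training Corpus Layer (object storage)"
    COLD = "Tier 3: Cold AI Memory Reservoir (tape)"


@dataclass
class Dataset:
    name: str
    reads_per_month: float       # observed or forecast access frequency
    in_active_pipeline: bool     # currently used by ingestion, embedding, or training jobs
    retention_only: bool         # kept solely to satisfy retention or DR mandates


# Hypothetical thresholds (assumptions, not recommendations).
HOT_READS_PER_MONTH = 100.0
WARM_READS_PER_MONTH = 0.1


def place(ds: Dataset) -> Tier:
    """Assign a dataset to a tier using simple access-driven rules."""
    if ds.in_active_pipeline or ds.reads_per_month >= HOT_READS_PER_MONTH:
        return Tier.HOT
    if ds.retention_only and ds.reads_per_month < WARM_READS_PER_MONTH:
        return Tier.COLD
    # Default: anything that may still feed model development stays warm.
    return Tier.WARM
```

The default branch is deliberate: data drops to tape only when it is both rarely read and held purely for retention, which mirrors the narrower, "surgical" role the article assigns to tape.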

Tape no longer functions as “secondary storage” but as a specialized deep-archive layer within a broader AI-ready data fabric. Its role becomes more surgical: narrower, more focused, and matched to the value of the data it holds.

Balancing Cost Efficiency with AI Value: Why Disk/Object Storage Now Delivers Greater ROI

It is true that tape remains the lowest-cost medium per TB for long-term retention. However, cost efficiency must now be evaluated against AI readiness, operational agility, risk reduction, and innovation velocity. The emerging enterprise calculus is no longer about storage cost alone but about data activation cost: what it takes to rapidly retrieve, prepare, and operationalize data for AI and analytics.
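The shift from storage cost to data activation cost can be made concrete with a simple model: total cost over a planning horizon equals retention cost plus, for each recall, a staging cost and a delay cost for the hours before data is usable. The Python sketch below assumes that linear model. Every field name, function, and number in it is a hypothetical placeholder, not a market price or a measurement.

```python
from dataclasses import dataclass


@dataclass
class Medium:
    name: str
    storage_per_tb_month: float  # $ per TB-month to retain data
    recall_per_tb: float         # $ per TB to stage data for use (handling, staging, egress)
    recall_hours: float          # hours before recalled data is usable


def total_cost(m: Medium, tb: float, months: int, recalls: int,
               recalled_tb: float, delay_cost_per_hour: float) -> float:
    """Retention cost plus data activation cost over the planning horizon."""
    retention = m.storage_per_tb_month * tb * months
    activation = recalls * (m.recall_per_tb * recalled_tb
                            + m.recall_hours * delay_cost_per_hour)
    return retention + activation


def break_even_recalls(cheap: Medium, fast: Medium, tb: float, months: int,
                       recalled_tb: float, delay_cost_per_hour: float) -> float:
    """Recalls per horizon at which the faster medium costs the same as the cheaper one."""
    retention_gap = (fast.storage_per_tb_month - cheap.storage_per_tb_month) * tb * months
    per_recall_gap = ((cheap.recall_per_tb - fast.recall_per_tb) * recalled_tb
                      + (cheap.recall_hours - fast.recall_hours) * delay_cost_per_hour)
    if per_recall_gap <= 0:
        # The faster medium has no activation advantage, so it never breaks even.
        return float("inf")
    return retention_gap / per_recall_gap


# Hypothetical placeholder inputs for illustration only.
tape = Medium("tape", storage_per_tb_month=1.0, recall_per_tb=40.0, recall_hours=40.0)
obj = Medium("object", storage_per_tb_month=5.0, recall_per_tb=0.0, recall_hours=0.0)
```

With these placeholder inputs (100 TB retained for 12 months, 10 TB per recall, a delay cost of 50 per hour), `break_even_recalls(tape, obj, 100, 12, 10, 50)` returns 2.0: the two media cost the same at two recalls per year, and every additional recall favors object storage. A real comparison would substitute actual contract pricing, recall volumes, and an organization's own estimate of what waiting for data costs.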

Disk and object storage deliver superior value in four strategic dimensions:

- AI Acceleration and Competitive Advantage

Organizations gain faster experimentation cycles, reduced model training times, enhanced data quality processing, and real-time workflows that tape simply cannot support.

- Governance, Lineage, and Reproducibility

Object storage provides granular version control, policy-based lifecycle management, immutability, and metadata-rich indexing, which are essential for regulatory-grade AI.

- Operational Reliability and Risk Reduction

Disk/object systems reduce recovery uncertainty, eliminate manual tape handling, simplify DR processes, and ensure higher confidence in long-term data usability.

- Enterprise Agility and Innovation Capacity

Data becomes continuously accessible, interoperable across AI pipelines, and available for cross-team, cross-region research and model development.
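One concrete practice behind "higher confidence in long-term data usability" is routine fixity checking: recording a checksum for each file at ingestion and periodically re-verifying it, so unreadable or altered data is detected without a full restoration exercise. The sketch below is a minimal Python illustration, assuming files on a locally mounted path. The `build_manifest` and `audit` helpers are hypothetical; object stores typically expose their own checksum metadata, which this sketch does not use.

```python
from __future__ import annotations

import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large objects need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Record a checksum for every file under root, keyed by relative path."""
    return {str(p.relative_to(root)): sha256_of(p)
            for p in sorted(root.rglob("*")) if p.is_file()}


def audit(root: Path, manifest: dict[str, str]) -> dict[str, list[str]]:
    """Re-hash the archive and report files that are missing or no longer match."""
    report: dict[str, list[str]] = {"missing": [], "corrupted": []}
    for rel, expected in manifest.items():
        p = root / rel
        if not p.is_file():
            report["missing"].append(rel)
        elif sha256_of(p) != expected:
            report["corrupted"].append(rel)
    return report
```

On disk or object storage this audit can run continuously in the background; on tape the same check requires mounting and reading each cartridge, which is exactly the restoration overhead described earlier.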

When viewed through the lens of AI value creation rather than simple storage economics, disk/object storage becomes the superior medium for the majority of enterprise datasets, especially those expected to contribute to model development, analytics, and insight generation.

Tape remains valuable, but only for narrow, low-access, long-term archival use cases.

The Unified AI Data Fabric: Next-Generation Infrastructure for the AI Decade

As organizations modernize toward fourth-generation AI-native data platforms, a unified data fabric, one that spans hot, warm, and cold tiers, becomes foundational. The emerging architectural best practice is clear:

- Hot workloads on flash/disk

- Warm training corpora on object storage

- Cold regulatory archives on tape

This architecture optimizes for cost efficiency, sustainability, data integrity, AI model quality, and enterprise resilience.

Tape does not disappear; it becomes a smaller, more focused component of a broader, more sophisticated data ecosystem.

Disk and object storage emerge as the operational backbone enabling enterprises to convert historical data, from decades-old research to contemporary telemetry, into high-value AI fuel.

Closing Thoughts

Executives must recognize that the debate is no longer about storage technologies; it is about unlocking the latent value of historical data to compete in an AI-driven world. The organizations that modernize their data pipelines by shifting from tape-heavy architectures to disk/object-centric platforms will be the ones positioned to accelerate innovation, meet regulatory expectations, and drive durable enterprise advantage.

Tape will remain an essential but minimized tier. Disk and object storage will power the next decade of AI value creation.

Your insights are essential to this evolving conversation.

As enterprises shift toward AI-native architectures, storage modernization is becoming a strategic imperative. How are you preparing your archival and tape environments for AI-driven data demands? I invite you to contribute your perspective and extend this dialogue.