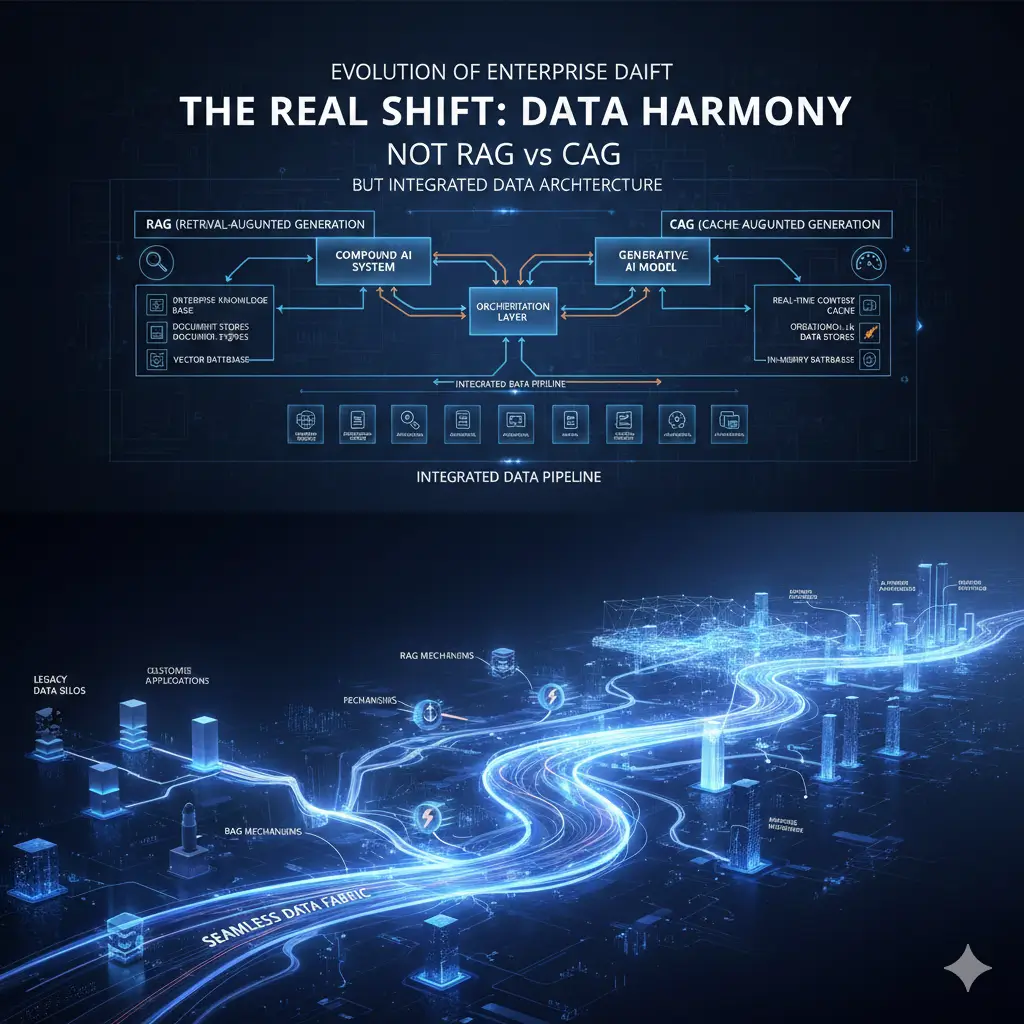

The Real Enterprise Shift Is Not RAG vs CAG

Enterprise AI is failing not because models are not smart enough, but because they cannot remember what they already proved to be true. Retrieval-Augmented Generation (RAG) creates AI amnesia. Cache-Augmented Generation (CAG) creates institutional memory.

That distinction is what determines whether AI can operate in regulated, high-risk environments.

Key Definitions

- Retrieval-Augmented Generation (RAG): An AI pattern where each user query retrieves documents from a vector database and feeds them into a language model for reasoning. Every query is processed from scratch.

- Cache-Augmented Generation (CAG): An AI architecture that stores validated AI executions (queries, tool calls, and results) in persistent semantic memory and reuses them for future queries, eliminating re-computation and randomness.

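- Model Context Protocol (MCP): An open protocol that standardizes how AI applications connect to external tools and data sources. Because every tool call passes through it with an explicit name, parameters, and result, those calls can be captured, cached, audited, and replayed deterministically.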

Why RAG Breaks in Regulated Enterprises

RAG works well for exploration. It fails when correctness, consistency, and auditability matter.

In regulated environments like life sciences, financial services, and government, the same questions get asked repeatedly:

- “Which compounds meet safety thresholds?”

- “Which customer transactions trigger AML alerts?”

- “Which records must be retained under SEC Rule 17a-4?”

RAG treats each of these as a brand-new event.

That creates three systemic risks:

| Risk | What Happens |
|---|---|
| Latency | Every query triggers new retrieval and reasoning |
| Inconsistency | Identical questions return different answers |
| Compliance failure | There is no stable audit trail |

An AI that cannot answer the same regulatory question the same way twice is not enterprise-grade.

What Cache-Augmented Generation (CAG) Changes

CAG changes what an AI system is. Instead of recomputing answers, the system reuses validated decisions. When a query is answered correctly once, CAG stores:

- The normalized question

- The data sources used

- The tools invoked

- The verified result

- The timestamp and provenance
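The five fields above map naturally onto a single record. The sketch below is a minimal illustration, assuming a simple text normalization (lowercasing, collapsing whitespace, dropping trailing punctuation) and a SHA-256 key; `normalize_question`, `CachedExecution`, and the dataset and tool names are hypothetical, not part of any product API.

```python
import hashlib
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Tuple


def normalize_question(question: str) -> str:
    """Lowercase, collapse whitespace, and drop trailing punctuation so that
    trivially different phrasings map to the same cache key."""
    text = re.sub(r"\s+", " ", question.strip().lower())
    return text.rstrip("?.! ")


@dataclass(frozen=True)
class CachedExecution:
    """One validated execution (hypothetical schema mirroring the list above)."""
    normalized_question: str
    data_sources: Tuple[str, ...]
    tools_invoked: Tuple[str, ...]
    verified_result: str
    provenance: str  # who or what validated the result
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    @property
    def key(self) -> str:
        """Stable lookup key derived from the normalized question."""
        return hashlib.sha256(self.normalized_question.encode("utf-8")).hexdigest()


# Usage: two phrasings of the same question produce the same key.
entry = CachedExecution(
    normalized_question=normalize_question("Which records must be retained under SEC Rule 17a-4?"),
    data_sources=("records_catalog",),           # hypothetical dataset name
    tools_invoked=("retention_policy_lookup",),  # hypothetical tool name
    verified_result="<validated answer>",
    provenance="reviewed by compliance",
)
same_key = hashlib.sha256(
    normalize_question("  which records must be retained under SEC Rule 17a-4 ").encode("utf-8")
).hexdigest()
print(entry.key == same_key)  # True
```

Keying on the normalized question keeps lookups cheap and exact; catching rephrasings that share meaning but not wording needs a similarity step on top.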

The next time that query or a semantically equivalent one appears, the system does not guess. It replays the verified execution.

This transforms AI from probabilistic text generation into deterministic decision memory.

Why This Matters for Life Sciences and Compliance

In life sciences, AI is not about creativity. It is about defensibility.

Consider a researcher asking:

- “Which PRMT5 compounds have IC50 < 10 nM?”

A RAG system might:

- Retrieve different papers

- Rank sources differently

- Hallucinate values

- Produce non-repeatable results

A CAG system returns the same validated dataset every time, with traceability to ChEMBL, BindingDB, or PubChem.
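Returning "the same validated dataset every time" can be checked mechanically by fingerprinting the result together with its sources. A minimal sketch, assuming results are lists of JSON-serializable rows; the compound IDs and IC50 values below are placeholders, not real ChEMBL, BindingDB, or PubChem records, and the function names are hypothetical.

```python
import hashlib
import json
from typing import Dict, List


def select_potent(compounds: List[Dict], max_ic50_nm: float = 10.0) -> List[Dict]:
    """Keep compounds whose IC50 is strictly below the threshold (in nM)."""
    return [c for c in compounds if c["ic50_nm"] < max_ic50_nm]


def fingerprint(rows: List[Dict], sources: List[str]) -> str:
    """SHA-256 over a canonical serialization of rows and sources.
    Sorting makes the hash independent of retrieval or ranking order."""
    canonical = json.dumps(
        {
            "rows": sorted(rows, key=lambda r: json.dumps(r, sort_keys=True)),
            "sources": sorted(sources),
        },
        sort_keys=True,
        separators=(",", ":"),
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Placeholder data: hypothetical IDs and values for illustration only.
compounds = [
    {"compound_id": "CPD-A", "ic50_nm": 4.1},
    {"compound_id": "CPD-B", "ic50_nm": 25.0},
    {"compound_id": "CPD-C", "ic50_nm": 7.8},
]
result = select_potent(compounds)
stored = fingerprint(result, ["ChEMBL", "BindingDB"])

# A later replay, even with rows and sources in a different order, must match.
replayed = fingerprint(list(reversed(result)), ["BindingDB", "ChEMBL"])
print(stored == replayed)  # True
```

Storing the fingerprint alongside the cached answer gives auditors a cheap way to confirm that a replayed result is byte-for-byte the one that was originally validated.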

That is what makes AI usable in:

- FDA-regulated workflows

- Clinical trial analytics

- Pharmacovigilance

- Regulatory submissions

What the Benchmarks Show

Industry research from Microsoft Research and IBM (2025) demonstrated that CAG-style architectures can:

- Deliver up to 40× faster response times

- Reduce inference cost by 30 to 50% at scale

- Eliminate retrieval-ranking errors that plague RAG

Those gains come from reusing computation instead of repeating it.
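How large that gain is depends on how often queries are actually reused. A back-of-the-envelope model, assuming a fraction h of queries hit the cache: blended cost = h × hit cost + (1 - h) × miss cost. The latencies below are hypothetical, chosen so that a single cache hit is 40× cheaper than a miss.

```python
def expected_cost(hit_rate: float, miss_cost: float, hit_cost: float) -> float:
    """Blended per-query cost when a fraction `hit_rate` of queries replay from cache."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * hit_cost + (1.0 - hit_rate) * miss_cost


def overall_speedup(hit_rate: float, miss_cost: float, hit_cost: float) -> float:
    """How much faster (or cheaper) the blended system is than recomputing every query."""
    return miss_cost / expected_cost(hit_rate, miss_cost, hit_cost)


# Hypothetical latencies: 2.0 s for a full retrieval-and-reasoning pass, 0.05 s for a replay.
for h in (0.5, 0.9, 1.0):
    print(f"hit rate {h:.0%}: {overall_speedup(h, 2.0, 0.05):.1f}x")
```

With these inputs the loop prints 2.0x, 8.2x, and 40.0x. Under this simple model, a 40× per-hit speedup only approaches a 40× system-level speedup as the hit rate nears 100%, which is why reuse on repeated queries is what drives the gains.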

RAG vs CAG: Enterprise Reality

| Dimension | RAG | CAG | Primary Enterprise Benefit |
|---|---|---|---|
| Consistency | Stochastic | Deterministic | Regulatory safety |
| Latency | Increases with data | Sub-second on reuse | User trust |
| Cost at scale | Grows with queries | Declines with reuse | Long-term ROI |
| Auditability | Weak | Strong | Compliance |
| Governance | External | Built-in | Risk control |

Why Model Context Protocol (MCP) Is the Glue

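CAG only works if executions are captured, normalized, and governed. MCP provides the foundation for that layer: because every tool call passes through one standard protocol, the orchestration layer can ensure that: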

- Every tool call is logged

- Every dataset is recorded

- Every decision is reproducible
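Together, these guarantees amount to an append-only record of every tool call that can later be replayed. The sketch below is a plain-Python stand-in, assuming a decorator wraps each tool; it does not use the MCP SDK, and `audited_tool`, `replay`, `AUDIT_LOG`, and the toy AML tool are hypothetical names.

```python
import functools
import json
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List

# Stand-in for a durable, append-only audit store.
AUDIT_LOG: List[Dict[str, Any]] = []


def audited_tool(dataset: str) -> Callable:
    """Decorator that records each call: tool name, parameters, dataset, result, time."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(**params: Any) -> Any:  # keyword-only so parameters are explicit
            result = fn(**params)
            AUDIT_LOG.append({
                "tool": fn.__name__,
                "params": params,
                "dataset": dataset,
                "result": result,
                "at": datetime.now(timezone.utc).isoformat(),
            })
            return result
        return wrapper
    return decorator


def replay(tool: str, **params: Any) -> Any:
    """Return the most recent recorded result for an identical call without re-running the tool."""
    for record in reversed(AUDIT_LOG):
        if record["tool"] == tool and record["params"] == params:
            return record["result"]
    raise LookupError(f"no recorded call to {tool} with {json.dumps(params, sort_keys=True)}")


@audited_tool(dataset="aml_rules_v1")  # hypothetical dataset label
def flag_transactions(threshold_usd: int) -> List[str]:
    """Toy AML rule over hypothetical transaction IDs and amounts."""
    amounts = {"txn-001": 12_500, "txn-002": 900}
    return sorted(t for t, amt in amounts.items() if amt >= threshold_usd)


flag_transactions(threshold_usd=10_000)
print(replay("flag_transactions", threshold_usd=10_000))  # ['txn-001']
```

A real deployment would write to durable storage and record dataset versions rather than labels, but the shape is the same: the log, not the model, becomes the source of truth for what was decided.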

This turns AI into something enterprises can:

- Audit

- Secure

- Govern

- Reuse

Where CAG Is Not a Fit

CAG is designed for repeatable, high-value queries.

It is not ideal for:

- One-off exploratory questions

- Highly volatile data with minute-level freshness needs
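One way to enforce that boundary is a per-query-class freshness window: a cached execution is replayed only while it is younger than its maximum age. The policy classes and windows below are hypothetical; the point is that minute-level windows leave almost nothing to reuse, which is why such data is a poor fit.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, Optional

# Hypothetical policies: None = no expiry; timedelta = maximum age before recomputation.
FRESHNESS_POLICY: Dict[str, Optional[timedelta]] = {
    "retention_requirement": None,
    "compound_potency": timedelta(days=30),
    "intraday_position": timedelta(minutes=1),
}


def is_reusable(query_class: str, created_at: datetime, now: Optional[datetime] = None) -> bool:
    """True if a cached execution of this class is still within its freshness window.
    Unknown classes are never reused, so new query types default to recomputation."""
    if query_class not in FRESHNESS_POLICY:
        return False
    max_age = FRESHNESS_POLICY[query_class]
    if max_age is None:
        return True
    now = now or datetime.now(timezone.utc)
    return now - created_at <= max_age


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
cached_at = now - timedelta(minutes=5)
print(is_reusable("compound_potency", cached_at, now))   # True
print(is_reusable("intraday_position", cached_at, now))  # False
```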

Most enterprises, however, direct the majority of their AI usage at the same critical questions, asked over and over again.

That is where CAG delivers orders-of-magnitude value.

Why This Matters for Solix

CAG requires something most AI stacks lack:

- Metadata

- Lineage

- Governance

- Access control

- Retention policies

- Audit trails

Solix already provides those layers for enterprise data. CAG simply turns them into AI memory. That is why the shift to CAG favors information-architecture platforms, not just model vendors.
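To make access control, retention, lineage, and audit concrete for a cache, each read can check who is asking and whether the entry is still within its retention period, logging the attempt either way. A minimal sketch with hypothetical names and data; it is not a Solix API.

```python
from dataclasses import dataclass
from datetime import date
from typing import Any, Dict, FrozenSet, List, Optional, Tuple


@dataclass(frozen=True)
class GovernedEntry:
    result: str
    allowed_roles: FrozenSet[str]  # access control
    retain_until: date             # retention policy
    lineage: Tuple[str, ...]       # upstream datasets the result came from


def governed_read(entry: GovernedEntry, role: str, today: date,
                  audit: List[Dict[str, Any]]) -> Optional[str]:
    """Serve a cached result only to permitted roles and only within its retention window.
    Every attempt, allowed or denied, is appended to the audit trail."""
    allowed = role in entry.allowed_roles and today <= entry.retain_until
    audit.append({"role": role, "date": today.isoformat(),
                  "allowed": allowed, "lineage": entry.lineage})
    return entry.result if allowed else None


audit_trail: List[Dict[str, Any]] = []
entry = GovernedEntry("verified answer", frozenset({"compliance"}),
                      date(2030, 12, 31), ("trade_archive",))
print(governed_read(entry, "compliance", date(2025, 6, 1), audit_trail))  # verified answer
print(governed_read(entry, "marketing", date(2025, 6, 1), audit_trail))   # None
```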

The Bottom Line

RAG answers questions.

CAG institutionalizes decisions.

RAG forgets.

CAG remembers.

In regulated enterprise AI, memory is not a feature.

It is the product.

Frequently Asked Questions

Q: Is CAG replacing RAG?

A: No. RAG is still useful for exploration. CAG is what makes repeated, regulated decisions reliable.
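In practice the two can sit side by side: replay a verified answer when one exists, fall back to RAG otherwise, and cache a new answer only after it passes validation. A sketch assuming the RAG pipeline and the validation step are supplied as callables (both hypothetical stubs here); the model itself is never modified, only the layer around it.

```python
from typing import Callable, Dict, Tuple


def answer(
    question: str,
    cache: Dict[str, str],
    rag_pipeline: Callable[[str], str],
    validate: Callable[[str, str], bool],
) -> Tuple[str, str]:
    """Replay a verified answer if one exists; otherwise fall back to RAG.
    A fresh RAG answer is cached only after it passes validation."""
    key = " ".join(question.lower().split())  # naive normalization for the cache key
    if key in cache:
        return cache[key], "replayed"
    draft = rag_pipeline(question)
    if validate(question, draft):
        cache[key] = draft
        return draft, "generated+cached"
    return draft, "generated"


def rag_stub(question: str) -> str:
    """Stand-in for a real retrieval-and-generation pipeline."""
    return "draft answer"


def approve_all(question: str, draft: str) -> bool:
    """Stand-in for human or rule-based validation."""
    return True


cache: Dict[str, str] = {}
print(answer("Which compounds meet safety thresholds?", cache, rag_stub, approve_all))
print(answer("which compounds  meet safety thresholds?", cache, rag_stub, approve_all))
```

The first call prints `('draft answer', 'generated+cached')` and the second `('draft answer', 'replayed')`, since both phrasings normalize to the same key.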

Q: What industries benefit most from CAG?

A: Life sciences, financial services, government, legal, insurance, and any industry with compliance, audit, or safety requirements.

Q: How long does CAG implementation take?

A: Most enterprises can deploy a production CAG layer in 8 to 12 weeks by starting with 25 to 50 high-value queries.
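Choosing that starting set can begin with simple frequency counts over existing query logs. A minimal sketch, assuming a log of raw question strings and naive normalization; weighting by business or regulatory value would be a natural extension that this sketch does not attempt.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def normalize(question: str) -> str:
    """Naive normalization: lowercase, collapse whitespace, drop trailing '?'."""
    return " ".join(question.lower().split()).rstrip("?")


def top_repeated_queries(query_log: Iterable[str], n: int = 25) -> List[Tuple[str, int]]:
    """Return the n most frequent normalized questions with their counts."""
    return Counter(normalize(q) for q in query_log).most_common(n)


log = [
    "Which records must be retained under SEC Rule 17a-4?",
    "which records must be retained under SEC Rule 17a-4",
    "Which compounds meet safety thresholds?",
    "Which records  must be retained under SEC Rule 17a-4?",
]
print(top_repeated_queries(log, n=2))
```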

Q: Does CAG require retraining models?

A: No. It operates at the orchestration and memory layer, not the model layer.