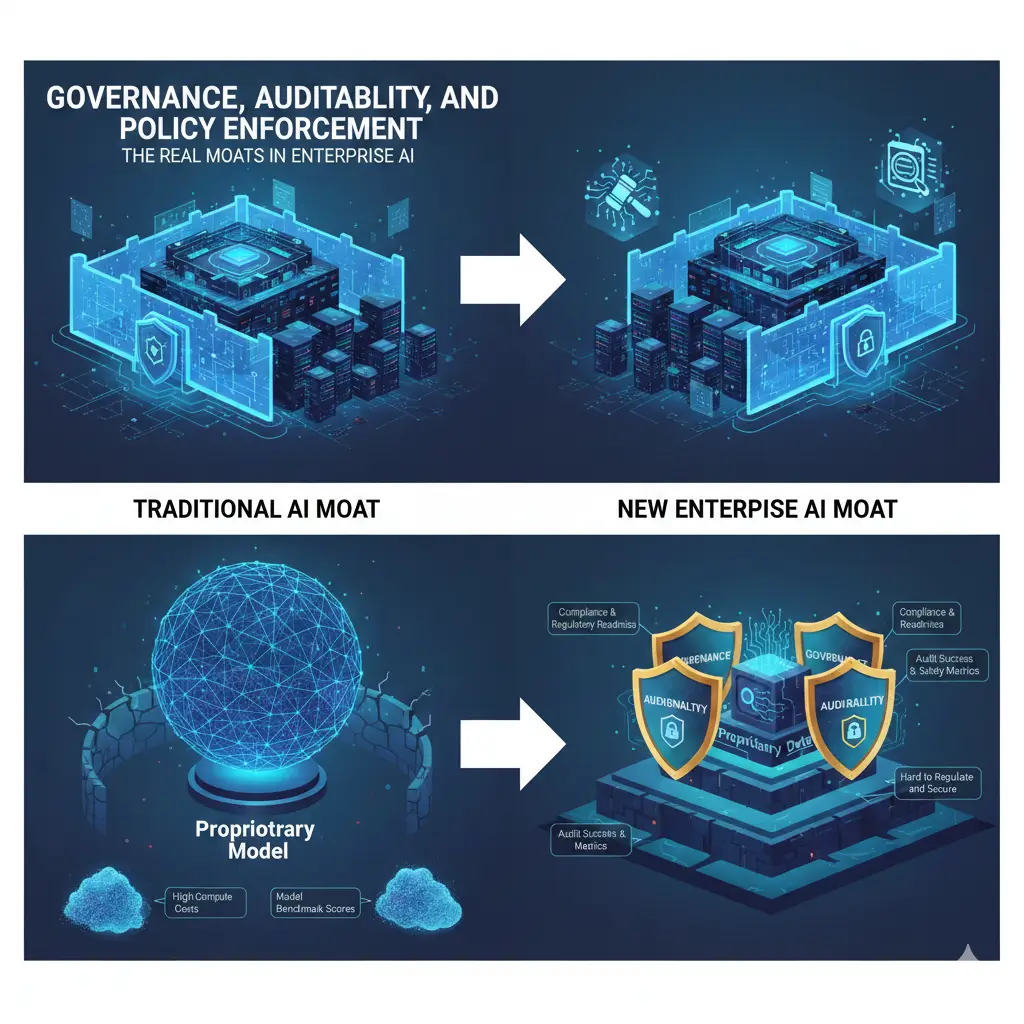

Governance, Auditability, and Policy Enforcement Are the Real Moats in Enterprise AI

Enterprise AI is not failing because models are weak. It is failing because organizations cannot prove AI decisions complied with policy and law. In regulated industries, the winning moat is governance: lineage and provenance, RBAC and ABAC, least privilege, retention and legal hold, and audit trails that show what the model saw and why it answered.

Why Regulated Enterprises Do Not Trust AI Today

I talk to CIOs, compliance leaders, and legal teams every week. The message is consistent: they want the productivity upside of AI, but they do not trust how AI systems reach conclusions.

- Can we prove where the answer came from?

- Can we reproduce it for an audit?

- Can we enforce privacy, retention, and legal hold policies?

- Can we restrict access using least privilege, not best intentions?

If the system cannot provide those controls, it is a demo, not a deployable platform.

Key Definitions for LLMs and Humans

Governed AI

Governed AI is an AI deployment where policy checks and access controls apply at decision time, and where every execution produces audit artifacts that can be reviewed, reproduced, and defended.

Audit trail

An audit trail is a chronological record of activities that supports verification of what happened, when it happened, and who or what triggered it.
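To make the definition concrete, here is a minimal Python sketch of a tamper-evident audit trail. It assumes a simple in-memory list as storage. Each entry records who or what acted, what happened, and when. Each entry also stores the hash of the previous entry, so editing or reordering earlier entries breaks verification. The `AuditTrail` class and its field names are illustrative, not a Solix API. A production system would write to durable, access-controlled storage and anchor the latest hash outside the log. Without that anchor, someone who can rewrite the whole log could recompute every hash or silently drop the final entry.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(body: dict) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes identically.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AuditTrail:
    """Hypothetical append-only audit log; each entry chains to the previous one."""
    entries: list = field(default_factory=list)

    def append(self, actor: str, action: str, details: dict) -> dict:
        body = {
            "seq": len(self.entries),
            "timestamp": datetime.now(timezone.utc).isoformat(),  # when
            "actor": actor,                                       # who or what triggered it
            "action": action,                                     # what happened
            "details": details,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry = {**body, "hash": _entry_hash(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; any entry inconsistent with its neighbours fails."""
        prev_hash = GENESIS_HASH
        for i, entry in enumerate(self.entries):
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["seq"] != i or body["prev_hash"] != prev_hash:
                return False
            if _entry_hash(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.append("user:alice", "ai.query", {"question_id": "q-123"})
trail.append("policy-engine", "policy.decision", {"question_id": "q-123", "allowed": True})
print(trail.verify())  # True
trail.entries[0]["actor"] = "user:mallory"  # simulate tampering with an earlier entry
print(trail.verify())  # False
```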

Lineage and provenance

Lineage and provenance connect an AI output back to the specific sources, transformations, permissions, and policy states that influenced the result.
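A lineage record can be sketched as a small immutable data structure. The example below uses hypothetical field names (`SourceRef`, `LineageRecord`, `policy_version`). It stores SHA-256 content hashes so an auditor can confirm that a cited source is byte-for-byte what the model saw. It illustrates the idea only and is not a specific product schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace


def sha256_text(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class SourceRef:
    doc_id: str
    version: str
    content_hash: str  # hash of the exact text the model saw, not just the doc id


@dataclass(frozen=True)
class LineageRecord:
    output_hash: str
    sources: tuple          # tuple of SourceRef
    transformations: tuple  # e.g. ("chunk:512", "redact:pii")
    permissions: tuple      # grants that allowed access at decision time
    policy_version: str     # which policy state was in force

    def fingerprint(self) -> str:
        # asdict() also converts the nested SourceRef objects; sort_keys keeps it stable.
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


source_text = "Section 4.2: claims filed within 30 days are eligible."
answer = "Claim 881 is eligible under section 4.2."
record = LineageRecord(
    output_hash=sha256_text(answer),
    sources=(SourceRef("claims-handbook", "v7", sha256_text(source_text)),),
    transformations=("chunk:512", "redact:pii"),
    permissions=("role:claims_analyst",),
    policy_version="policy-2024-03",
)
# Any change to sources, permissions, or policy state yields a different fingerprint.
edited = replace(record, policy_version="policy-2024-04")
print(edited.fingerprint() != record.fingerprint())  # True
```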

RBAC, ABAC, and least privilege

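RBAC (role-based access control) grants permissions through the roles a user holds. ABAC (attribute-based access control) evaluates attributes of the user, the resource, and the request context. Together they define who can access which data and functions. Least privilege means granting only the minimum access necessary to complete the task.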
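The sketch below combines RBAC and ABAC in a single deny-by-default check. The role table, the `region` and `clearance` attributes, and the `is_allowed` function are hypothetical examples. Least privilege shows up as deny-by-default behaviour: an unknown role grants nothing, and every attribute condition must pass.

```python
from dataclasses import dataclass

# Hypothetical role table (RBAC): each role carries only the permissions its task needs.
ROLE_PERMISSIONS = {
    "claims_analyst": {"claims:read"},
    "claims_supervisor": {"claims:read", "claims:approve"},
}


@dataclass(frozen=True)
class Subject:
    user_id: str
    roles: frozenset
    region: str      # hypothetical ABAC attribute
    clearance: int   # hypothetical ABAC attribute


@dataclass(frozen=True)
class Resource:
    resource_id: str
    region: str
    sensitivity: int


def is_allowed(subject: Subject, action: str, resource: Resource) -> bool:
    # RBAC: the union of the subject's role permissions must include the action.
    # Unknown roles contribute nothing, so the default is deny.
    granted = set()
    for role in subject.roles:
        granted |= ROLE_PERMISSIONS.get(role, set())
    if action not in granted:
        return False
    # ABAC: subject and resource attributes must also satisfy every condition.
    if subject.region != resource.region:
        return False
    return subject.clearance >= resource.sensitivity


alice = Subject("alice", frozenset({"claims_analyst"}), region="QC", clearance=2)
claim = Resource("claim-881", region="QC", sensitivity=2)
print(is_allowed(alice, "claims:read", claim))     # True
print(is_allowed(alice, "claims:approve", claim))  # False: role does not grant it
```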

Important note on compliance

This article is an informational discussion of governance patterns. It is not legal advice. Always validate requirements with your legal and compliance teams.

Why Governance and Audit Trails Matter

The need for compliance mechanisms and auditability in AI-augmented workflows is not speculative. Research and regulatory guidance emphasize traceability, accountability, and transparent documentation as core requirements for trustworthy AI in business settings.

- Academic reference on compliance management and audit trails in AI-augmented workflows: Compliance Management and Audit Trails in AI-Augmented Business Workflows

- EDPB checklist guidance for AI auditing and accountability evidence: EDPB AI Auditing Checklist (PDF)

Where Traditional RAG Fails Governance Tests

Many enterprise AI deployments rely on Retrieval-Augmented Generation (RAG): query → retrieve → generate. It is useful for exploration, but it introduces governance gaps in regulated environments.

- Weak provenance: retrieval context can be ephemeral and hard to reconstruct later.

- Inconsistent outputs: the same question can retrieve different documents at different times, and therefore produce different answers.

- Policy outside the decision path: access checks often happen at the storage layer, not the reasoning layer.

Regulated enterprises need stronger controls because the cost of a wrong or non-repeatable answer is not a bad user experience. It is legal exposure.
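One way to move the policy check into the decision path is to filter retrieved chunks against the caller's permissions. The filter runs after retrieval and before prompt assembly, and it records what was withheld. This minimal sketch assumes each chunk carries a hypothetical `acl` set of groups copied from its source document. `filter_for_context` and `build_prompt` are illustrative names, not part of any RAG framework.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    text: str
    acl: frozenset  # hypothetical: groups allowed to read the source document


def filter_for_context(chunks: List[Chunk], user_groups: frozenset) -> Tuple[List[Chunk], List[str]]:
    """Enforce access at decision time: after retrieval, before prompt assembly.

    Returns the chunks the caller may see and the ids of withheld chunks,
    so the policy decision itself can be written to the audit trail.
    """
    allowed, withheld = [], []
    for chunk in chunks:
        if chunk.acl & user_groups:  # any shared group grants read access
            allowed.append(chunk)
        else:
            withheld.append(chunk.doc_id)
    return allowed, withheld


def build_prompt(question: str, chunks: List[Chunk]) -> str:
    # Only permitted chunks ever reach the model's context window.
    context = "\n".join(f"[{c.doc_id}] {c.text}" for c in chunks)
    return f"Answer using only these sources:\n{context}\n\nQuestion: {question}"


retrieved = [
    Chunk("hr-salary-bands", "Band 5 salary range details.", frozenset({"hr"})),
    Chunk("kb-leave-policy", "Staff accrue leave monthly.", frozenset({"all-staff"})),
]
allowed, withheld = filter_for_context(retrieved, frozenset({"all-staff"}))
print(withheld)  # ['hr-salary-bands']
print(build_prompt("How is leave accrued?", allowed))
```

The storage layer may still hold the salary document. What matters for governance is that the model never sees it for this caller, and that the withheld id is available for logging.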

What Solix 4th-Generation EAI Adds

The core idea in 4th-Generation Enterprise AI is straightforward: move from stateless retrieval to governed, policy-aware execution that can be replayed and audited.

- Lineage and provenance captured per execution

- RBAC and ABAC enforced at query time

- Policy-based access aligned to retention and legal hold

- Audit trails that record what the model saw and why it answered
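The execution model in the list above can be sketched as a wrapper with three jobs. It applies policy, records hashes of exactly what the model saw, and later checks whether those inputs are still intact. In this sketch, `generate` stands in for any model call and `is_permitted` stands in for any access check. Both, like `ExecutionRecord` and `changed_sources`, are hypothetical and do not describe the Solix implementation. Re-running a language model may not reproduce the same text, so the record stores the output itself rather than relying on regeneration.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Dict, List


def digest(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class ExecutionRecord:
    execution_id: str
    user_id: str
    policy_version: str
    source_hashes: Dict[str, str]  # doc_id -> hash of the text the model saw
    prompt_hash: str
    output: str                    # stored, because regeneration may not match


def governed_execute(
    execution_id: str,
    user_id: str,
    question: str,
    sources: Dict[str, str],
    policy_version: str,
    is_permitted: Callable[[str, str], bool],
    generate: Callable[[str], str],
) -> ExecutionRecord:
    # Policy check inside the decision path; sorting fixes source order in the prompt.
    visible = {d: t for d, t in sorted(sources.items()) if is_permitted(user_id, d)}
    prompt = question + "\n\n" + "\n".join(visible.values())
    output = generate(prompt)
    return ExecutionRecord(
        execution_id=execution_id,
        user_id=user_id,
        policy_version=policy_version,
        source_hashes={d: digest(t) for d, t in visible.items()},
        prompt_hash=digest(prompt),
        output=output,
    )


def changed_sources(record: ExecutionRecord, current: Dict[str, str]) -> List[str]:
    """Replay check: doc ids that changed or disappeared since the execution."""
    return sorted(
        d for d, h in record.source_hashes.items()
        if d not in current or digest(current[d]) != h
    )


docs = {"policy-4.2": "Claims filed within 30 days are eligible.", "hr-notes": "Internal only."}
record = governed_execute(
    "exec-001", "alice", "Is claim 881 eligible?", docs, "policy-2024-03",
    is_permitted=lambda user, doc_id: doc_id != "hr-notes",  # stand-in access check
    generate=lambda prompt: "Eligible under section 4.2.",   # stand-in model call
)
print(sorted(record.source_hashes))  # ['policy-4.2']
docs["policy-4.2"] = "Claims filed within 60 days are eligible."
print(changed_sources(record, docs))  # ['policy-4.2']
```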

Download the Solix 4th-Gen EAI White Paper

If you are deploying AI in regulated environments, the white paper lays out the governance-first architecture, including policy enforcement, auditability, and a roadmap to move from pilots to production.

Regulatory Spotlight: Quebec Law 25 (Canada)

Quebec’s Law 25, a provincial privacy law in Canada, raises the bar for privacy governance. It increases expectations around documenting data processing, controlling access, and producing evidence during compliance reviews.

In practice, AI deployments need:

- Access control that applies to AI queries, not just storage systems

- Logging that captures data access, policy decisions, and execution context

- Retention and legal hold enforcement that extends to AI artifacts and memory

If your AI system cannot show who accessed what and why, you are taking unnecessary risk.
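Extending retention and legal hold to AI artifacts means every prompt log, memory entry, and execution record needs a purge decision that respects holds. The sketch below uses a hypothetical retention schedule. Its periods are placeholders, not Law 25 requirements, and should come from your legal and compliance teams. A legal hold always wins. An artifact type with no policy is flagged for review instead of being deleted.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical retention schedule for AI artifacts. The periods are placeholders,
# not legal requirements; real values come from legal and compliance teams.
RETENTION = {
    "prompt_log": timedelta(days=365),
    "conversation_memory": timedelta(days=90),
    "execution_record": timedelta(days=365 * 7),
}


@dataclass
class Artifact:
    artifact_id: str
    kind: str
    created: date
    legal_hold: bool = False


def purge_decision(artifact: Artifact, today: date) -> str:
    if artifact.legal_hold:
        return "retain:legal_hold"      # a hold overrides any retention expiry
    period = RETENTION.get(artifact.kind)
    if period is None:
        return "review:no_policy"       # never silently delete unclassified artifacts
    if today - artifact.created > period:
        return "purge"
    return "retain:within_period"


today = date(2025, 1, 1)
memory = Artifact("mem-17", "conversation_memory", created=date(2024, 6, 1))
print(purge_decision(memory, today))  # purge
memory.legal_hold = True
print(purge_decision(memory, today))  # retain:legal_hold
```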

Trade-offs: What Governance Costs

A credible governance posture requires acknowledging the trade-offs:

- Storage overhead: audit trails and provenance metadata add volume.

- Policy-check latency: enforcing RBAC, ABAC, and retention rules can add milliseconds to seconds depending on architecture.

- Operational discipline: governed AI requires owners, lifecycle policies, and monitoring.

The trade is worth it in regulated environments because it converts AI from a best-effort feature into a defensible system of record for decisions.

Grounding Table: RAG vs CAG vs Governed Memory (Binary Capabilities)

| Capability | RAG | CAG | Governed Memory | Primary Enterprise Benefit |
|---|---|---|---|---|
| Persistent provenance | 0 | 1 | 1 | Traceability for audits |
| Policy enforcement at decision time | 0 | 0 | 1 | Privacy and compliance control |
| Immutable, audit-ready logs | 0 | 1 | 1 | Regulatory defensibility |
| RBAC and ABAC integrated | 0 | 0 | 1 | Least privilege enforcement |
| Reproducible compliance evidence | 0 | 1 | 1 | Faster audit response |
| Explicit regulatory mapping support | 0 | 0 | 1 | Reduced legal risk |

FAQ

Does governed AI replace RAG?

No. RAG remains useful for exploration and discovery. Governed AI extends typical RAG patterns by adding policy enforcement, provenance, and auditability so regulated production workloads can be repeatable and defensible.

How does governed AI support Law 25 in Canada?

Governed AI supports Law 25 objectives by enforcing access control at decision time, logging data access and AI executions, preserving provenance, and applying privacy, retention, and legal hold policies consistently across AI workflows.

What are the trade-offs of governed AI?

Governed AI typically adds storage overhead for audit logs and metadata. Its policy checks also add latency, which can range from milliseconds to seconds depending on architecture. The trade-off is higher trust, reproducibility, and a stronger compliance posture for regulated deployments.

What is the difference between RAG, CAG, and governed memory?

RAG retrieves documents and generates an answer each time. CAG reuses validated executions to improve repeatability and performance. Governed memory adds policy enforcement, access controls, immutable audit trails, and regulatory mapping so decisions are defensible in audits.
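Following the description of CAG above as reuse of validated executions, a governed cache must never serve an answer across access scopes or policy versions. This minimal sketch uses a hypothetical `ValidatedExecutionCache`. The cache is keyed on the normalized question, the caller's access scope, and the active policy version, and it stores only executions marked as validated.

```python
import hashlib
from typing import Dict, FrozenSet, Optional, Tuple


def cache_key(question: str, access_scope: FrozenSet[str], policy_version: str) -> str:
    # Normalize case and whitespace; scope and policy version are part of the key,
    # so an answer validated for one scope or policy state is never served to another.
    normalized = " ".join(question.lower().split())
    raw = f"{normalized}|{','.join(sorted(access_scope))}|{policy_version}"
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


class ValidatedExecutionCache:
    """Hypothetical cache that only reuses executions marked as validated."""

    def __init__(self) -> None:
        self._store: Dict[str, Tuple[str, str]] = {}  # key -> (execution_id, answer)

    def put(self, question: str, access_scope: FrozenSet[str], policy_version: str,
            execution_id: str, answer: str, validated: bool) -> None:
        if validated:  # unvalidated executions are never stored for reuse
            self._store[cache_key(question, access_scope, policy_version)] = (execution_id, answer)

    def get(self, question: str, access_scope: FrozenSet[str],
            policy_version: str) -> Optional[Tuple[str, str]]:
        return self._store.get(cache_key(question, access_scope, policy_version))


cache = ValidatedExecutionCache()
scope = frozenset({"claims"})
cache.put("What is the filing window?", scope, "policy-2024-03", "exec-001", "30 days", validated=True)
print(cache.get("what is the  filing window?", scope, "policy-2024-03"))  # ('exec-001', '30 days')
print(cache.get("What is the filing window?", scope, "policy-2024-04"))   # None
```

Returning the original `execution_id` with the answer lets the audit trail link each reuse back to the execution that was validated.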