Why AI Agents Fail in the Enterprise and How to Build Them So They Don’t

AI agents are entering the enterprise faster than governance frameworks can keep up. What works in a demo or pilot often fails quietly in production, not because the agent is unintelligent, but because the surrounding architecture is incomplete.

The uncomfortable truth most organizations discover too late is this:

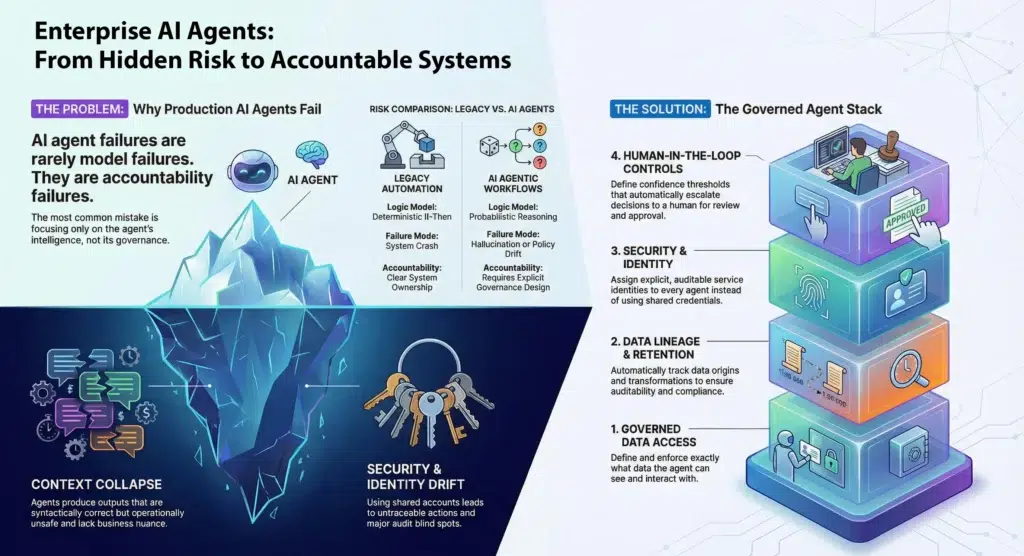

AI agent failures are rarely model failures. They are accountability failures.

The Mental Model Most Enterprises Get Wrong

One of the biggest reasons AI agent initiatives stall is that teams struggle to visualize where the “agent” ends and where enterprise responsibility begins. Vendors tend to frame agents as autonomous intelligence layers. Enterprises must treat them as governed actors operating inside controlled systems.

The correct mental model is not an agent roaming freely across systems, but an agent operating within a layered governance stack.

The Governed Agent Stack

At the center sits the AI agent. Surrounding it are enterprise control layers that are not optional in production:

- Governed Data Access: defines exactly what the agent can see

- Data Lineage and Retention: preserves traceability and regulatory alignment

- Security and Identity: establishes explicit, auditable service identities

- Human-in-the-Loop Controls: define escalation, review, and override points

When these layers are missing or loosely enforced, AI agents may appear productive while quietly increasing operational and compliance risk.
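To make the stack concrete, here is a minimal Python sketch in which each control layer is a check that can stop an agent's request before it reaches data. Everything in it is hypothetical and for illustration only: `ActionRequest`, the layer functions, the dataset names and the 0.9 threshold. Lineage and retention are left out to keep it short. In a real deployment, these checks would be enforced by the platform rather than by the agent's own code.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass(frozen=True)
class ActionRequest:
    """A request from the agent to touch enterprise data (hypothetical schema)."""
    agent_id: str      # service identity the agent runs as
    dataset: str       # dataset the agent wants to use
    operation: str     # "read" or "write"
    confidence: float  # score in [0, 1]; how it is produced is an assumption

# A layer returns None to allow the request, or a reason string to stop it.
Layer = Callable[[ActionRequest], Optional[str]]

def identity_layer(registered: set[str]) -> Layer:
    """Security and Identity: only known, dedicated service identities may act."""
    def check(req: ActionRequest) -> Optional[str]:
        return None if req.agent_id in registered else f"unknown identity {req.agent_id}"
    return check

def data_access_layer(allowed: dict[str, set[str]]) -> Layer:
    """Governed Data Access: each identity sees only its allowlisted datasets."""
    def check(req: ActionRequest) -> Optional[str]:
        if req.dataset in allowed.get(req.agent_id, set()):
            return None
        return f"{req.agent_id} may not access {req.dataset}"
    return check

def human_review_layer(min_confidence: float) -> Layer:
    """Human-in-the-Loop: low-confidence writes stop and wait for a reviewer."""
    def check(req: ActionRequest) -> Optional[str]:
        if req.operation == "write" and req.confidence < min_confidence:
            return "escalate to human review"
        return None
    return check

def evaluate(req: ActionRequest, stack: list[Layer]) -> str:
    """Apply the layers in order; the first layer that objects decides the outcome."""
    for layer in stack:
        reason = layer(req)
        if reason is not None:
            return f"DENY: {reason}"
    return "ALLOW"

stack = [
    identity_layer({"invoice-agent"}),
    data_access_layer({"invoice-agent": {"ap_invoices"}}),
    human_review_layer(min_confidence=0.9),
]
print(evaluate(ActionRequest("invoice-agent", "ap_invoices", "read", 0.6), stack))    # ALLOW
print(evaluate(ActionRequest("invoice-agent", "hr_salaries", "read", 0.99), stack))   # DENY: invoice-agent may not access hr_salaries
print(evaluate(ActionRequest("invoice-agent", "ap_invoices", "write", 0.7), stack))   # DENY: escalate to human review
```

The agent never decides for itself whether a layer applies. It only produces a request, and the surrounding stack decides.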

How AI Agents Actually Fail in Production

Context Collapse

AI agents reason fluently, but they do not inherently understand organizational nuance, regulatory intent, or policy boundaries. Without persistent business context, agents produce outputs that are syntactically correct but operationally unsafe.
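One way to supply missing business context is to check every proposed action against explicit, machine-readable rules before it executes. The sketch below assumes the agent returns its proposal as JSON. The `POLICY` values, the field names and the `validate_offer` helper are all hypothetical. A successful parse shows only that the output is well formed, not that the offer is allowed.

```python
import json

# Hypothetical business rules that the model cannot infer from its training data.
POLICY = {"max_discount_pct": 15, "allowed_regions": {"US", "CA"}}

def validate_offer(raw_output: str) -> list[str]:
    """Return the policy violations in an agent-proposed offer (JSON text)."""
    offer = json.loads(raw_output)  # parsing only proves the output is well-formed
    violations = []
    discount = offer.get("discount_pct", 0)
    limit = POLICY["max_discount_pct"]
    if discount > limit:
        violations.append(f"discount {discount}% exceeds the {limit}% limit")
    region = offer.get("region")
    if region not in POLICY["allowed_regions"]:
        violations.append(f"region {region!r} is not permitted")
    return violations

# Well-formed, fluent output that still breaks two business rules:
print(validate_offer('{"discount_pct": 40, "region": "EU", "note": "Loyal customer"}'))
```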

The Agent Trap: Don’t mistake a high-performing demo for a production-ready system. A demo proves the model is smart. Production proves your architecture is resilient.

Data Lineage Blindness

Agents can ingest large volumes of structured and unstructured data, but enterprises remain accountable for where that data originated, how it was transformed, and how long it must be retained.

These failures surface later, during audits, investigations, or legal discovery, when teams cannot reconstruct how a decision was made or which sources influenced the agent’s reasoning.
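Reconstruction is only possible if sources are captured at decision time. This minimal sketch records, for each decision, which source versions were read and how they were transformed. It also computes a hash, which can be stored separately so that later edits to the record are detectable. The schema (`SourceRef`, `DecisionRecord`, `sources_for`) is an assumption made for illustration, not a standard format.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

@dataclass(frozen=True)
class SourceRef:
    """One input the agent consumed (hypothetical schema)."""
    source_id: str       # document, table, or API the data came from
    version: str         # snapshot or version actually read
    transformation: str  # processing applied before the model saw it

@dataclass
class DecisionRecord:
    """Everything needed to reconstruct one agent decision later."""
    decision_id: str
    agent_id: str
    output: str
    sources: list[SourceRef]
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def fingerprint(self) -> str:
        """SHA-256 over canonical JSON; keep it in write-once storage to detect edits."""
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def sources_for(log: list[DecisionRecord], decision_id: str) -> list[str]:
    """Audit question: which sources influenced this decision?"""
    return [f"{s.source_id}@{s.version}"
            for rec in log if rec.decision_id == decision_id
            for s in rec.sources]

log = [DecisionRecord(
    decision_id="D-1042",
    agent_id="claims-agent",
    output="deny claim",
    sources=[SourceRef("policy_manual.pdf", "v7", "chunked and embedded"),
             SourceRef("claims_db.claims", "2024-05-01", "filtered by claim_id")],
)]
print(sources_for(log, "D-1042"))  # ['policy_manual.pdf@v7', 'claims_db.claims@2024-05-01']
```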

Over-Automation Without Escalation

Many organizations grant agents execution authority without defining confidence thresholds or approval gates. Autonomy without structured escalation does not create efficiency. It creates deferred risk.
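A confidence threshold and an approval gate can be as simple as a routing table. In this sketch, the action names, the thresholds and the `route` function are hypothetical. The sketch also assumes that a usable confidence score exists. Model-reported confidence can be poorly calibrated, so in practice both the score and the thresholds would need to be validated against observed outcomes.

```python
from enum import Enum

class Route(Enum):
    AUTO_EXECUTE = "auto_execute"
    HUMAN_APPROVAL = "human_approval"
    REJECT = "reject"

# Hypothetical policy table: per action, the confidence needed to act alone,
# and whether a human must approve no matter how confident the agent is.
POLICY = {
    "draft_reply":   {"auto_threshold": 0.70, "always_approve": False},
    "update_record": {"auto_threshold": 0.90, "always_approve": False},
    "issue_refund":  {"auto_threshold": 0.95, "always_approve": True},
}
REJECT_BELOW = 0.30  # below this, discard the output instead of asking a human

def route(action: str, confidence: float) -> Route:
    """Decide whether an agent action runs, waits for approval, or is dropped."""
    policy = POLICY.get(action)
    if policy is None:
        # Actions nobody has classified are never executed autonomously.
        return Route.HUMAN_APPROVAL
    if confidence < REJECT_BELOW:
        return Route.REJECT
    if policy["always_approve"] or confidence < policy["auto_threshold"]:
        return Route.HUMAN_APPROVAL
    return Route.AUTO_EXECUTE

print(route("draft_reply", 0.82).name)   # AUTO_EXECUTE
print(route("issue_refund", 0.99).name)  # HUMAN_APPROVAL
```

Unknown actions fall back to human approval rather than execution. As a result, giving an agent a new capability requires an explicit policy decision.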

Security and Identity Drift

Agents often operate using shared service accounts or inherited human credentials. Over time, this leads to privilege expansion, unclear ownership, and audit blind spots that security teams struggle to detect.
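Identity drift can be caught with a periodic audit that compares what each agent is granted against what it actually uses. The sketch assumes you can export agent identities and observed scopes, for example from access logs. The `AgentIdentity` fields and the `audit_identities` function are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class AgentIdentity:
    """How one agent authenticates (hypothetical schema)."""
    agent: str                    # logical agent name
    principal: str                # account the agent actually runs as
    is_service_account: bool      # False means it borrows a human's credentials
    owner: str                    # accountable team or person; "" if nobody
    granted_scopes: frozenset[str]

def audit_identities(identities: list[AgentIdentity],
                     used_scopes: dict[str, set[str]]) -> list[str]:
    """Flag shared principals, human credentials, missing owners, and unused grants."""
    findings = []
    agents_by_principal = defaultdict(list)
    for ident in identities:
        agents_by_principal[ident.principal].append(ident.agent)
    for principal, agents in agents_by_principal.items():
        if len(agents) > 1:
            findings.append(f"{principal}: shared by {sorted(agents)}")
    for ident in identities:
        if not ident.is_service_account:
            findings.append(f"{ident.agent}: uses human credentials ({ident.principal})")
        if not ident.owner:
            findings.append(f"{ident.agent}: no accountable owner")
        # Scopes granted but never exercised are candidates for removal.
        unused = ident.granted_scopes - used_scopes.get(ident.agent, set())
        if unused:
            findings.append(f"{ident.agent}: unused scopes {sorted(unused)}")
    return findings

identities = [
    AgentIdentity("triage-bot", "svc-helpdesk", True, "it-ops",
                  frozenset({"tickets:read", "tickets:write"})),
    AgentIdentity("summary-bot", "svc-helpdesk", True, "it-ops",
                  frozenset({"tickets:read"})),
    AgentIdentity("report-bot", "jane.doe", False, "",
                  frozenset({"finance:read"})),
]
observed = {"triage-bot": {"tickets:read"}, "summary-bot": {"tickets:read"},
            "report-bot": {"finance:read"}}
for finding in audit_identities(identities, observed):
    print(finding)
```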

Probabilistic Behavior in Deterministic Environments

Regulated workflows expect consistency and explainability. AI agents operate probabilistically. Without controls, identical inputs can produce different outputs, neither of which may be defensible after the fact.
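One common mitigation, not described in the original text, is to sample the agent several times on the same input and act only when the answers agree. Disagreement then becomes an escalation signal instead of a silent coin flip. This is a minimal sketch with three caveats:

- `generate` stands in for whatever model call you use.
- The normalization (trim and lowercase) is deliberately naive.
- Agreement across samples shows stability, not correctness.

Pinning the model version and logging sampling parameters are still needed to explain a decision later.

```python
from collections import Counter
from itertools import cycle
from typing import Callable, Optional

def consistent_answer(generate: Callable[[str], str], prompt: str,
                      samples: int = 5,
                      min_agreement: float = 0.8) -> tuple[Optional[str], float]:
    """Sample several times; return the majority answer only if it is stable enough."""
    # Naive normalization so trivial formatting differences do not count as disagreement.
    outputs = [generate(prompt).strip().lower() for _ in range(samples)]
    answer, count = Counter(outputs).most_common(1)[0]
    agreement = count / samples
    if agreement >= min_agreement:
        return answer, agreement
    return None, agreement  # unstable: escalate to a human instead of acting

# Stand-ins for a real model call, so the example runs without one.
steady = cycle(["Approve"])
flaky = cycle(["approve", "deny"])
print(consistent_answer(lambda p: next(steady), "claim 1042"))            # ('approve', 1.0)
print(consistent_answer(lambda p: next(flaky), "claim 1042", samples=4))  # (None, 0.5)
```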

The Agent Accountability Matrix

AI agents fundamentally change an organization’s risk profile. The difference becomes clear when compared to legacy automation.

| Feature | Legacy Automation | AI Agentic Workflows |
|---|---|---|
| Logic Model | Deterministic If-Then | Probabilistic Reasoning |
| Data Access | Static API Keys | Dynamic and Inherited Permissions |
| Failure Mode | System Crash | Hallucination or Policy Drift |
| Audit Path | Log Files | Traceable Reasoning Chains |
| Accountability | Clear System Ownership | Requires Explicit Governance Design |

The Solix Advantage: Preventing Real-World Agent Failures

AI agents are only as trustworthy as the data foundation beneath them. Solix addresses the most common enterprise failure modes directly:

- Preventing Data Lineage Blindness: Solix automatically tracks and tags every data element an agent touches, enabling full reconstruction of decisions long after execution.

- Retention-Aware Access by Design: Agents operate only within policy-aligned data zones, enforcing compliance through architecture rather than manual review.

- Auditable Reasoning Paths: Inputs, outputs, sources, timestamps, and execution context are preserved for governance, audits, and investigations.

- Isolation from Systems of Record: Agents analyze and reason without directly destabilizing transactional platforms.

This allows enterprises to deploy AI agents safely without retrofitting controls after incidents occur.
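Retention-aware, zone-restricted access can be illustrated without reference to any product. The sketch below is not Solix's implementation or API. The `Record` fields, the zone names and the `agent_view` function are hypothetical. The sketch filters records so that an agent sees only data in its assigned zones that is still within its retention window. Deletion schedules and legal holds are separate concerns and are not modeled.

```python
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass(frozen=True)
class Record:
    """A governed data item (hypothetical schema)."""
    record_id: str
    zone: str             # policy-aligned data zone, e.g. "claims-analytics"
    created: date
    retention_days: int   # how long policy allows the record to be used

def readable_by_agent(record: Record, agent_zones: set[str], today: date) -> bool:
    """Allow access only inside the agent's zones and within the retention window."""
    if record.zone not in agent_zones:
        return False
    return today <= record.created + timedelta(days=record.retention_days)

def agent_view(records: list[Record], agent_zones: set[str], today: date) -> list[Record]:
    """The only records the agent is allowed to reason over today."""
    return [r for r in records if readable_by_agent(r, agent_zones, today)]

records = [
    Record("r1", "claims-analytics", date(2024, 1, 10), 365),
    Record("r2", "claims-analytics", date(2020, 3, 1), 365),  # past retention
    Record("r3", "payroll", date(2024, 2, 1), 365),           # wrong zone
]
visible = agent_view(records, {"claims-analytics"}, today=date(2024, 6, 1))
print([r.record_id for r in visible])  # ['r1']
```

The filter runs before the agent sees anything, so compliance does not depend on the agent choosing to behave.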

The 5-Minute AI Agent Stress Test

Ask your engineering or platform team these questions today:

- If an agent violates a policy, can we trace which specific document influenced that reasoning?

- Do our agents have unique service identities, or are they operating through shared or human accounts?

- What is the explicit confidence threshold that forces an agent to escalate to a human?

- Can we reproduce and explain an agent’s decision six months from now?

- Are retention and access controls enforced automatically or left to developer discipline?

If these answers are unclear, the agent may be functioning, but the system is not enterprise-ready.

Final Thought

AI agents are inevitable. Uncontrolled AI agents are optional.

The organizations that succeed will not be those that automate the fastest, but those that design for accountability, resilience, and trust from the start.

That is the difference between experimentation and enterprise AI at scale.