Best Practice for Structured Data ILM = Data Partitioning + Data Archiving + Data Retirement

Customers often ask us whether archiving or partitioning is the best practice for information lifecycle management (ILM). The question usually rests on a misunderstanding: because both techniques can be used to address performance and storage cost issues, they are assumed to be alternatives. Partitioning and archiving are not an either/or choice. Rather, they are complementary, as Gartner has also observed. So the correct answer to the question is "yes", and Solix EDMS supports partitioning, archiving, and retiring data to the cloud for all the applications across your enterprise.

According to Orafaq, database partitioning is an option that database vendors sell on top of the database license. Partitioning allows DBAs to split large tables into smaller, more manageable "sub-tables", called partitions, to improve database performance, manageability, and availability. It supports scaling of certain applications and makes it possible to place partitions on tiered storage, taking advantage of lower-cost storage options. Whether partitioning is a good data management strategy depends largely on how the individual application is architected. For instance, data warehouse applications are generally good partitioning candidates because date is usually a key dimension and can be used to spread the data out evenly (by years, quarters, months, etc.). This segregation can improve query performance by excluding 'offline' partitions that are no longer needed for day-to-day operations, a technique known as partition elimination (or partition pruning). If an application's queries are not written to take advantage of partition elimination, partitioning does not significantly improve database performance, because the partitioned data still resides in the database; archiving, by contrast, moves complete sets of data out of the database.
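The partition-elimination point above can be illustrated with a toy, in-memory sketch in Python. It is not how any database engine implements partitioning; the class `MonthPartitionedTable`, its columns `order_date` and `customer`, and the `partitions_scanned` counter are hypothetical names chosen for illustration. Rows are range-partitioned by (year, month): a query that filters on the date can skip partitions outside its range, while a query that filters only on a non-key column has to touch every partition.

```python
from collections import defaultdict
from datetime import date


class MonthPartitionedTable:
    """Hypothetical toy table, range-partitioned by (year, month) of order_date."""

    def __init__(self):
        self.partitions = defaultdict(list)  # (year, month) -> list of rows
        self.partitions_scanned = 0          # counts partitions actually read

    def insert(self, row):
        d = row["order_date"]
        self.partitions[(d.year, d.month)].append(row)

    def query_by_date(self, start, end):
        """Predicate on the partition key: partitions outside [start, end] are pruned."""
        lo, hi = (start.year, start.month), (end.year, end.month)
        result = []
        for key, rows in self.partitions.items():
            if not (lo <= key <= hi):  # tuple comparison orders (year, month) correctly
                continue               # partition eliminated, never read
            self.partitions_scanned += 1
            result.extend(r for r in rows if start <= r["order_date"] <= end)
        return result

    def query_by_customer(self, customer):
        """No predicate on the partition key, so every partition must be scanned."""
        result = []
        for rows in self.partitions.values():
            self.partitions_scanned += 1
            result.extend(r for r in rows if r["customer"] == customer)
        return result


if __name__ == "__main__":
    t = MonthPartitionedTable()
    for m in range(1, 13):
        t.insert({"order_date": date(2023, m, 15), "customer": "acme" if m % 2 else "globex"})

    t.partitions_scanned = 0
    recent = t.query_by_date(date(2023, 11, 1), date(2023, 12, 31))
    print(len(recent), t.partitions_scanned)  # 2 rows, 2 of 12 partitions read

    t.partitions_scanned = 0
    acme = t.query_by_customer("acme")
    print(len(acme), t.partitions_scanned)    # 6 rows, all 12 partitions read
```

In a real database the optimizer performs the pruning itself, but generally only when the query's predicates reference the partition key, which is why the application's query design matters as much as the partitioning scheme.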

Database archiving, on the other hand, is the process of moving data that is no longer actively used to a separate data storage device, such as SATA disk or tape, for long-term retention. This strategy is recommended for older data that is no longer active in the production environment but is still important for reference or regulatory compliance. A common approach is to create two versions of each table, one for active data and one for archived data. However, this means that every query that needs to access archived data must select from a union of the active and archive tables. Database archiving products manage this data movement in an optimized manner while maintaining the integrity of the application.

Oracle, for instance, in its white paper “Using Database Partitioning with Oracle E-Business Suite”, recommends partitioning to improve performance, save cost, and reduce maintenance activities by moving old partitions to read-only tablespaces (p. 49). The biggest problem when defining partition strategies for the Oracle E-Business Suite is that the application code is prebuilt, which limits the choice of partition keys. Furthermore, changes to the partitioning strategies delivered with the base product are neither recommended nor supported. Introducing partitioning is therefore complex, requiring both substantial analysis and robust testing of its effect on the different components of your workload. This makes archiving older data more attractive. Also, database partitioning by itself does not meet long-term archiving and compliance requirements. On the other hand, transaction-based archiving alone may not be able to keep up with the volume demands of certain applications. Together, the two can provide a highly complementary solution that maintains the performance of the production database while saving money.

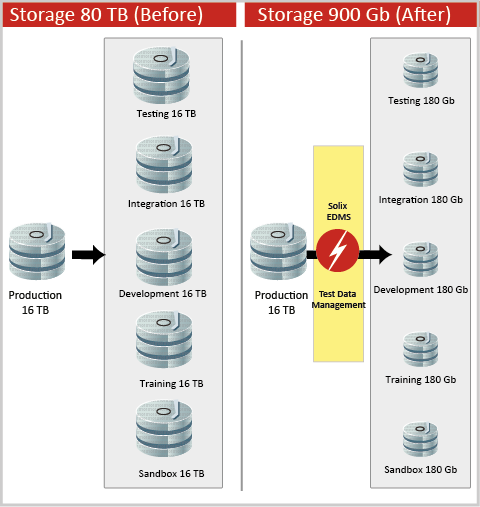

Solix EDMS is designed to support your partitioning and archiving strategy, as Gartner acknowledges in reports such as “Solid Vendor Solutions Bolster Database Archiving Market” by Sheila Childs and Alan Dayley. That report lists Solix among the four important vendors for database archiving. Solix EDMS can apply a consistent set of company ILM policies for data archiving and data retirement across all your data and applications. It can also retain read-only access to data when obsolescent applications are retired, an important data center efficiency strategy that the Gartner report cited above strongly supports.

On another note, I am happy to announce that we will co-sponsor www.ilmsolutions.net. It is an excellent resource for end users researching ILM strategy, as it publishes a wide range of information on the market, including industry white papers, analyst reports, and customer case studies.