The world population has reached 7 billion and is heading towards 9 billion by 2050. But the story is more complex than that single sentence implies, because population demographics are changing. The native population of the first world, excluding naturalized citizens and resident aliens, is level or in some cases shrinking, and some countries, such as Germany, are creating incentives for population growth. In contrast, much of Africa and Asia, excluding Japan, is experiencing huge growth. Barring some unforeseen major shift, experts expect India, which presently has the second largest population, to pass China in 2025. These trends also affect both culture and religion. Worldwide, for instance, some religious populations are growing much faster than others, and this will inevitably affect geopolitics. The ratio of men to women is also changing worldwide, because many third-world cultures prefer male children.

Perhaps the most important implication of this news is that basic resources, in particular food, water (for both personal use and agriculture), and energy, are being strained by continued population growth. Both China and India, for instance, are tapping deep, ancient aquifers that do not refill in order to expand the agriculture that feeds their populations. When those aquifers inevitably run dry, both countries will need to find a replacement water source or face a decrease in food production, which will make it harder to feed their people. Every additional individual will require the production of more food, water, and energy, which will generate more waste and pollution and further escalate the struggle over natural resources and space.

This has also been a year of increasingly extreme weather: widespread drought, enormous forest fires, and, above all, historic rainfall and flooding. This year has seen unusual flooding throughout the world, including the Mississippi Valley in the United States, Queensland in Australia, and, as of this writing, Bangkok, the capital of Thailand. These worsening conditions will further reduce our productivity and put more strain on already stretched resources.

The bottom line: we have to do more with less. We need to further educate our youth about the problems of population growth and the need to balance population against available resources. The solutions will always lie in empowering youth to make the right decisions. We also need to explore seawater purification to increase the water supply for agriculture and personal use, and solar and wind power to supplement and eventually replace more polluting forms of power generation. On my recent trip to UC Denver, I saw their solar-powered light bulb invention. This is great news, since much of the third world still burns fuel for light. It is a perfect example of doing more with less, and we need more innovations like it to cope with population growth.

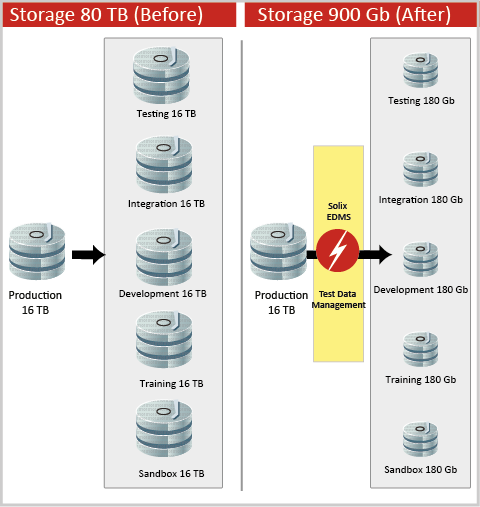

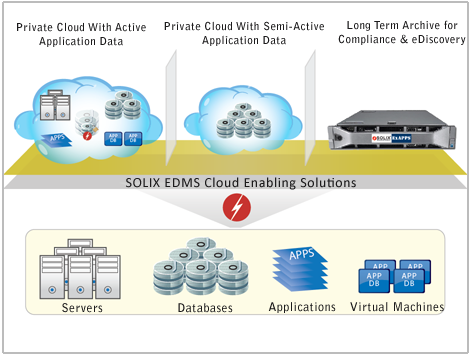

Solix is all about efficiency and doing more with less: organizing data and optimizing CPU, memory, and storage, and therefore energy, usage. This affects the entire infrastructure, including network, hardware, and software resources, and of course backup and recovery operations.