RHITL: Why the Right Human in the Loop Actually Matters

Blog Commentary

Look, everyone’s talking about “human in the loop” these days. It’s become one of those phrases that gets thrown around in every AI discussion, right up there with “ethical AI” and “guardrails.” But here’s the thing: just putting *a* human in the loop doesn’t cut it anymore. You need the *right* human in the loop. Because I love acronyms (see my prior blog, The Missing Piece in AI Governance: Fighting Bias In, Bias Out – SOLIX Blog), I’m calling it RHITL, and it’s not just semantics.

What’s Wrong with Regular HITL?

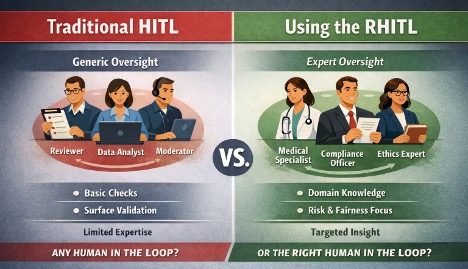

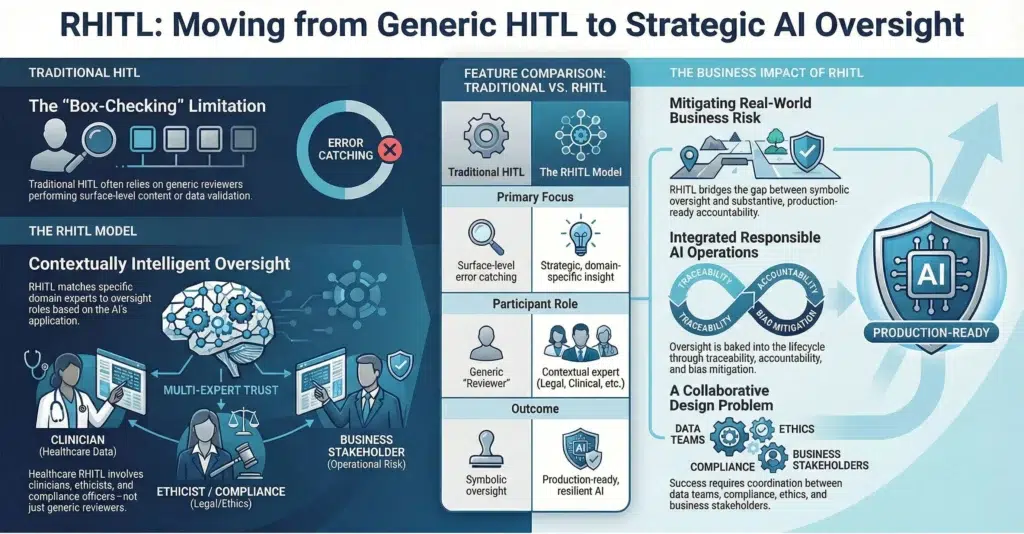

Traditional and basic human-in-the-loop setups tend to be pretty generic. Someone reviews the AI output. Someone checks the model. Someone signs off. Great. But who are these people? What do they actually know about the specific domain? Are they just checking boxes, or do they understand what they’re looking at?

Most HITL implementations focus on catching errors or validating outputs at a surface level: content moderation, data labeling, basic validation workflows. It’s better than nothing, but it’s not strategic.

RHITL flips that around. Instead of asking “where should we put a human?”, you ask “who specifically needs to be involved here, and why?” It’s about matching expertise to oversight responsibility.

Here’s what I mean: If you’re building a legal AI system, you don’t just need someone to review the outputs—you need an actual legal expert who understands compliance implications. If you’re deploying a financial scoring model, you don’t just need a data scientist monitoring accuracy—you need someone who’s accountable for fairness and can spot bias in real-world terms.

Why This Matters Right Now

We’re at a point where companies are moving AI from pilot projects to production systems that actually matter. And as they do that, the gap between symbolic oversight and substantive oversight becomes a real business risk.

At Solix, we work with organizations building serious data governance programs. What we’ve seen is that companies getting AI platform readiness right are the ones defining controls, roles, and standards upfront—not retrofitting them after something goes wrong.

RHITL isn’t about adding more bureaucracy. It’s about making sure oversight is role-driven and contextually intelligent. It’s baked into what we think of as responsible AI operations: traceability, accountability, bias mitigation across the entire lifecycle.

A Real Example

Let’s say you’re running a healthcare analytics platform that predicts patient outcomes.

Traditional HITL? You assign someone to review the model during validation. Done.

RHITL? You bring in clinicians who understand patient care, compliance officers who know regulatory requirements, and data ethicists who can evaluate fairness and risk. Each person validates the model from their specific angle.

The difference isn’t just better models—it’s models you can actually trust in production. Models that hold up to regulatory scrutiny. Models that patients and providers can have confidence in.
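To make the healthcare example concrete, here is a minimal Python sketch of a multi-role sign-off gate, assuming a model is cleared for production only when every required reviewer role has approved it. The `Role`, `Review`, and `ModelValidation` names, the reviewer IDs, and the “latest decision per role wins” rule are all hypothetical illustrations, not part of any Solix product or API.

```python
from dataclasses import dataclass, field
from enum import Enum


class Role(Enum):
    # Hypothetical reviewer roles taken from the healthcare example.
    CLINICIAN = "clinician"
    COMPLIANCE_OFFICER = "compliance_officer"
    DATA_ETHICIST = "data_ethicist"


@dataclass
class Review:
    reviewer: str
    role: Role
    approved: bool
    notes: str = ""


@dataclass
class ModelValidation:
    model_id: str
    required_roles: frozenset[Role]      # every one of these must approve
    reviews: list[Review] = field(default_factory=list)

    def record(self, review: Review) -> None:
        # Only roles that were assigned to this model may sign off on it.
        if review.role not in self.required_roles:
            raise ValueError(f"{review.role.value} is not a required reviewer for {self.model_id}")
        self.reviews.append(review)

    def latest_decisions(self) -> dict[Role, bool]:
        # A later review from the same role supersedes an earlier one.
        decisions: dict[Role, bool] = {}
        for review in self.reviews:
            decisions[review.role] = review.approved
        return decisions

    def missing_approvals(self) -> set[Role]:
        decisions = self.latest_decisions()
        return {role for role in self.required_roles if not decisions.get(role, False)}

    def ready_for_production(self) -> bool:
        # Cleared only when every required role's latest decision is an approval.
        return not self.missing_approvals()


validation = ModelValidation("patient-outcome-model", frozenset(Role))
validation.record(Review("clinician-01", Role.CLINICIAN, approved=True))
validation.record(Review("compliance-01", Role.COMPLIANCE_OFFICER, approved=True))
print(validation.ready_for_production())                          # False
print(sorted(r.value for r in validation.missing_approvals()))    # ['data_ethicist']
validation.record(Review("ethicist-01", Role.DATA_ETHICIST, approved=True))
print(validation.ready_for_production())                          # True
```

The point of keying the gate on roles rather than on head count: three approvals from three data scientists would still leave the clinical, regulatory, and fairness angles uncovered.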

How an AI Governance Framework Connects the “Right Human” to the Task

Implementing RHITL isn’t just a management philosophy; it requires a technical backbone to be effective at scale. This is where a robust AI Governance Framework becomes the essential “matchmaker.”

Without a centralized platform, finding the “right” human is a manual, messy process of emails and spreadsheets. With a governance-first architecture, the matching is automated and enforced through three core capabilities:

- Role-Based Access & Orchestration: The framework defines specific “Expert Personas” (e.g., Legal Counsel, Data Ethicist, or Domain Clinician). When a model hits a critical decision point—such as a high-risk financial prediction—the system automatically routes the output to the specific stakeholder with the authority to sign off, ensuring oversight isn’t just present, but qualified.

- Contextual Metadata Tagging: Even the right human can only make the right call with the right context. A governance framework attaches data lineage and “Model Cards” to every output. This allows a compliance officer to see exactly which data sources were used, providing the transparency they need to make an informed “human” judgment.

- Audit-Ready Traceability: RHITL only works if you can prove it happened. The framework creates a permanent record of who reviewed what, when, and why. This transforms “expert insight” into a documented trail of accountability, which is the difference between a successful regulatory audit and a catastrophic fine.
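The three capabilities above can be sketched together in a few dozen lines of Python. This is a minimal illustration under stated assumptions, not how any particular governance platform implements them: the routing table, the reviewer directory, the two-level risk scale, and every class and method name (`OversightRouter`, `route`, `review_packet`, `sign_off`, `export_audit`) are hypothetical. Routing maps a high-risk output to an Expert Persona, the review packet carries lineage and a model card reference, and each sign-off is appended to an audit log.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional

# Hypothetical routing rules: (domain, risk level) -> Expert Persona that must sign off.
ROUTING_RULES = {
    ("finance", "high"): "Data Ethicist",
    ("legal", "high"): "Legal Counsel",
    ("healthcare", "high"): "Domain Clinician",
}

# Hypothetical directory of which persona each reviewer holds.
REVIEWER_PERSONAS = {
    "counsel-01": "Legal Counsel",
    "ethicist-01": "Data Ethicist",
    "clinician-01": "Domain Clinician",
}


@dataclass
class ModelOutput:
    output_id: str
    domain: str
    risk: str                  # "low" or "high" in this sketch
    prediction: str
    data_sources: list[str]    # lineage: which sources fed this output
    model_card: str            # hypothetical pointer to the model card


@dataclass(frozen=True)
class AuditEntry:
    output_id: str
    reviewer: str
    persona: str
    decision: str
    reason: str
    timestamp: str


class OversightRouter:
    def __init__(self, rules: dict, directory: dict[str, str]):
        self.rules = rules
        self.directory = directory
        self._audit_log: list[AuditEntry] = []   # only ever appended to

    def route(self, output: ModelOutput) -> Optional[str]:
        """Return the persona that must review this output, or None if no review is needed."""
        if output.risk != "high":
            return None
        persona = self.rules.get((output.domain, output.risk))
        if persona is None:
            # Fail closed: a high-risk output with no matching expert is an error, not a pass.
            raise LookupError(f"no Expert Persona defined for domain {output.domain!r}")
        return persona

    def review_packet(self, output: ModelOutput) -> dict:
        """Bundle the prediction with its lineage and model card for the reviewer."""
        return {
            "output_id": output.output_id,
            "prediction": output.prediction,
            "data_sources": list(output.data_sources),
            "model_card": output.model_card,
        }

    def sign_off(self, output: ModelOutput, reviewer: str, decision: str, reason: str) -> AuditEntry:
        required = self.route(output)
        if required is None:
            raise ValueError(f"{output.output_id} does not require expert sign-off")
        # The persona comes from the directory, not from the reviewer's own claim.
        held = self.directory.get(reviewer)
        if held != required:
            raise PermissionError(f"{reviewer} holds {held!r}; {required!r} is required")
        entry = AuditEntry(output.output_id, reviewer, held, decision, reason,
                           datetime.now(timezone.utc).isoformat())
        self._audit_log.append(entry)
        return entry

    def export_audit(self) -> str:
        """Serialize who reviewed what, when, and why."""
        return json.dumps([asdict(e) for e in self._audit_log], indent=2)


router = OversightRouter(ROUTING_RULES, REVIEWER_PERSONAS)
loan = ModelOutput("out-001", "finance", "high", "decline",
                   ["credit_bureau_feed", "application_form"], "model-cards/credit-score-v2")
print(router.route(loan))   # Data Ethicist
print(router.review_packet(loan)["data_sources"])
router.sign_off(loan, "ethicist-01", "approved", "Decline reasons reviewed for proxy bias")
print(router.export_audit())
```

Two choices here are deliberate. The router fails closed, so a high-risk output with no defined expert raises an error instead of slipping through unreviewed. And the reviewer’s persona is looked up rather than self-declared, which is what makes the oversight qualified rather than merely present. A real deployment would also need durable, tamper-evident storage for the audit log; the in-memory list only stands in for it.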

By leveraging an AI Governance Framework, organizations move away from “accidental oversight” and toward a strategic, role-driven workflow that ensures the right eyes are on the right data at the right time.

It’s a Design Problem, Not Just a Technical One

Here’s what I think people miss: RHITL is fundamentally about collaboration. It forces you to bring together data teams, compliance, ethics, and business stakeholders—and make each of them the “right human” for their part of the process.

That’s messy sometimes. It requires coordination. But it’s also how you build AI systems that work in the real world, not just in demos.

As companies push toward enterprise-scale AI, the lesson is straightforward: AI doesn’t just need human input. It needs the right human insight at the right time. That’s what makes AI systems responsible, resilient, less prone to bias, and actually ready for production.