Enterprise RAG – How to Ground Enterprise AI in Governed Data

Large language models (LLMs), impressive as they are, still make mistakes. The impact of these mistakes often depends on the nature of the input prompt, the criticality of the scenario, and the action that the LLM output drives. In a consumer use case, occasional slips may be tolerable; in an enterprise setting, tolerance for error is near zero. Knowledge cutoffs and model hallucinations can cause compliance violations, undermine strategic decisions, and lead to lost revenue.

The solution is to ground LLM responses in the organization’s governed data, supplemented with current, verifiable information, so the model stays within the scope of the enterprise’s source of truth. Retrieval-Augmented Generation (RAG) provides exactly this safeguard. It anchors model outputs in verifiable data, allowing generative AI to be both accurate and fluent within the boundaries of governed enterprise information.

What is Retrieval-Augmented Generation?

RAG pairs an LLM with an external retrieval layer. Instead of relying solely on its training data (what the model “remembers”), the generative AI model can search trusted knowledge bases such as documents, databases, and APIs. The most relevant passages are then inserted into the prompt, so the model generates its output conditioned on this retrieved context. This can improve answer accuracy without retraining or fine-tuning the model’s weights.

Why Do Enterprises Need RAG?

RAG grounds LLM outputs in fact by linking each answer to specific trusted sources. Because those sources can be updated continuously, knowledge is refreshed without retraining the model, and recent data shows up in answers sooner. Domain-specific terminology, policies, and procedures are pulled into prompts as context, so answers reflect how the organization actually works. Finally, retrieval logs let reviewers trace which sources informed each answer, which simplifies compliance and auditing.

RAG Architecture Explained

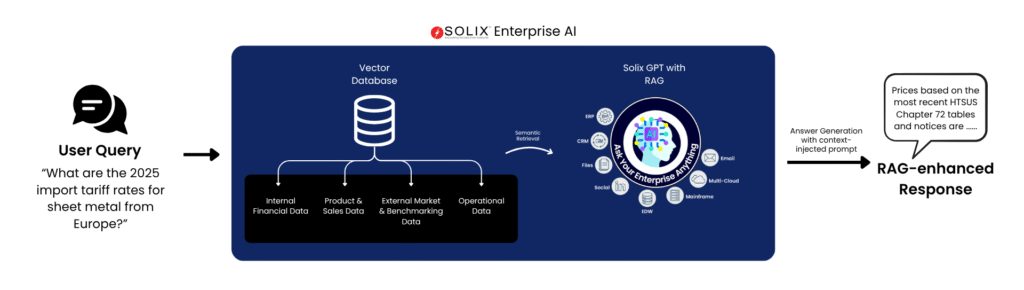

A RAG pipeline has three primary stages: retrieval, augmentation, and generation. Beforehand, source documents are preprocessed (parsed and split into chunks) and embedded into a vector database. At query time, the question is converted into a vector embedding, which is used to run a semantic search against the vector database. The top-k most relevant chunks are retrieved and added to the prompt as context. Finally, this augmented prompt (the original query plus the retrieved context) is sent to the LLM, which generates the final output.
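The stages can be traced end to end in a few lines of Python. This is a toy sketch, assuming a stand-in "embedding" (word-count vectors compared by cosine similarity) and an in-memory list in place of a vector database; a production pipeline would call a real embedding model and vector store. The names `embed`, `chunk`, `VectorIndex`, and `augment` are hypothetical, and the generation step (the LLM call itself) is left out.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding (assumption): term counts over lowercase word tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def chunk(text: str, size: int = 50) -> list[str]:
    """Preprocessing: split a document into fixed-size windows of words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

class VectorIndex:
    """In-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, str, Counter]] = []  # (doc_id, chunk, vector)

    def add(self, doc_id: str, text: str) -> None:
        # Ingestion: chunk the document and store one vector per chunk.
        for piece in chunk(text):
            self.entries.append((doc_id, piece, embed(piece)))

    def search(self, query: str, k: int = 3) -> list[tuple[float, str, str]]:
        # Retrieval: embed the query and return the top-k chunks by similarity.
        q = embed(query)
        scored = [(cosine(q, vec), doc_id, piece) for doc_id, piece, vec in self.entries]
        scored.sort(key=lambda hit: hit[0], reverse=True)
        return scored[:k]

def augment(query: str, hits: list[tuple[float, str, str]]) -> str:
    # Augmentation: prepend the retrieved chunks, tagged with source IDs, to the question.
    context = "\n".join(f"[{doc_id}] {piece}" for _, doc_id, piece in hits)
    return ("Answer using only the context below and cite source IDs. "
            "If the context is insufficient, say so.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}")

index = VectorIndex()
index.add("policy-7", "Travel expenses above 500 USD require manager approval.")
index.add("hr-2", "Employees accrue 20 vacation days per year.")
question = "Who approves travel expenses?"
hits = index.search(question, k=1)
prompt = augment(question, hits)
print(prompt)  # this string would be sent to the LLM for the generation step
```

Because these toy vectors only measure word overlap, they miss synonyms and paraphrases that a real embedding model would catch; that gap is one reason production systems often pair dense and lexical search.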

RAG-assisted vs Non-RAG-assisted Responses to a User Query

A useful way to understand RAG is to compare how an LLM answers a query with and without it:

Non-RAG-Assisted Response

Without RAG, an LLM answers a question using only its internal parameters, that is, the knowledge encoded during training. This has several drawbacks:

- Model Hallucination: When a query probes beyond the scope of an LLM’s training, the model tends to “fill in the blanks” with an invented but plausible-sounding answer.

- Stale Knowledge: A large language model’s knowledge of the world ends at its training cutoff. Questions about events or changes after that date may get outdated or inaccurate answers, which makes the output less reliable for decision-making.

- Poor Domain Adaptation: While LLMs are generally good at many tasks, without fine-tuning, their reliability becomes questionable for use cases in highly specialized and nuanced domains like healthcare, law, or finance.

RAG-Assisted Response

A RAG-assisted response, in contrast, draws on relevant passages that are retrieved from a trusted, up-to-date knowledge source and fed to the LLM before it generates the response. This helps in several ways:

- Outputs Grounded in Facts: With RAG, LLM outputs are grounded in facts from the enterprise’s knowledge base. This supplies context specific to each user query, which reduces hallucinations.

- Current Knowledge: By regularly refreshing the enterprise’s knowledge bases, LLMs can answer user queries with relevant, current information.

- Domain-adaptable AI: Instead of fine-tuning a model for each use case, enterprise AI implementations can retrieve from domain-specific knowledge bases, letting RAG systems capture the nuances of each domain.

Example

User Query: “What are the 2025 import tariff rates for sheet metal from Europe?”

Without RAG (plain LLM): Relies on training data with a cutoff. It may hedge or quote outdated rates.

With RAG (illustrative answer): “Based on the most recent HTSUS Chapter 72 tables and notices published by the U.S. trade authorities (updated Mar 2025), hot-rolled flat-rolled steel under HTSUS 7208.xx from EU member states is subject to an MFN base rate of X%, plus any applicable Section 232 measures. Exemptions apply for quotas under [program Y].

Sources: HTSUS §72 tables (rev. Mar 2025), Federal notices on Section 232 (Feb–Mar 2025).

Retrieved on: Sep 25, 2025. Grounding: High (r=0.84).”

(Numbers above are placeholders; a production system would cite the exact table rows and notices.)
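To produce answers in the format above, a system has to keep the answer text, its sources, and the retrieval date together. The sketch below assumes a hypothetical `GroundedAnswer` record (not part of any particular product) whose `render` method refuses to emit an uncited answer and returns a no-answer message instead. The example’s grounding score is omitted, since how that score is computed is not specified here.

```python
from dataclasses import dataclass, field
from datetime import date

@dataclass
class GroundedAnswer:
    """Hypothetical answer record: text plus the sources that support it."""
    text: str
    sources: list[str] = field(default_factory=list)  # e.g. table revisions, notices
    retrieved_on: date = field(default_factory=date.today)

    def render(self) -> str:
        # Enforce grounding: never emit an answer without at least one source.
        if not self.sources:
            return "No grounded answer: no supporting sources were retrieved."
        return (f"{self.text}\n\n"
                f"Sources: {', '.join(self.sources)}.\n\n"
                f"Retrieved on: {self.retrieved_on:%b %d, %Y}.")

answer = GroundedAnswer(
    text="EU hot-rolled flat-rolled steel under HTSUS 7208.xx carries an MFN base rate of X%.",
    sources=["HTSUS §72 tables (rev. Mar 2025)", "Federal notices on Section 232 (Feb–Mar 2025)"],
    retrieved_on=date(2025, 9, 25),
)
print(answer.render())
print(GroundedAnswer(text="Rates are 5%.").render())  # falls back to the no-answer message
```

Returning a no-answer message rather than an unsupported claim matches the “allow no-answer” behavior described in the blueprint.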

Implementation Blueprint (What to Build First)

- Scope & gold set: Pick one domain (e.g., tariffs, policy, product docs). Author 25–50 real user questions with reference answers and sources.

- Ingest pipeline: Parsers for top file types, metadata policy, de-duplication, PII classification, and legal-hold flags.

- Dual index: A dense vector store plus a lexical (keyword) index, with metadata stored alongside each document (date, system, jurisdiction, ACLs).

- Retrieval stack: Multi-query expansion → hybrid search → cross-encoder re-rank → context shaping (dedupe, compress, order).

- Prompting & generation: Enforce grounded answers. Require citations for declarative statements, allow a “no answer” response when confidence is low, and measure hallucination rate and answer relevance for transparency.

- Observability: Log retrieval sets/prompts/answers; run nightly offline eval; ship dashboards for groundedness and citation precision.

- Access & Governance: Enforce the principle of least privilege with RBAC/ABAC at both ingestion and query time, so each user sees only the information they are authorized to access. Pair this with comprehensive audit trails and with retention and legal-hold workflows.

- Rollout: Start behind a feature flag for one team; iterate on failure cases; expand sources and domains once metrics stabilize.
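The hybrid-search and access-control items can be illustrated together. This sketch assumes the dense and lexical indexes have already returned ranked lists of document IDs, and that each document’s ACL metadata is a set of role names (a plain RBAC simplification; ABAC would evaluate user and document attributes instead). It filters each list to documents the user may see, merges the lists with reciprocal rank fusion (RRF), one common way to combine hybrid results, and returns an empty list when nothing authorized matches, which the caller can treat as “no answer”. `hybrid_search` and `reciprocal_rank_fusion` are hypothetical names; cross-encoder re-ranking would run on the fused list and is not shown.

```python
from collections import defaultdict

def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Merge ranked lists of doc IDs: each list adds 1 / (k + rank) to a document's score."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

def hybrid_search(dense: list[str], lexical: list[str], acl: dict[str, set[str]],
                  user_roles: set[str], top_n: int = 5) -> list[str]:
    """Return up to top_n authorized doc IDs; an empty list signals 'no answer'."""

    def allowed(doc_id: str) -> bool:
        # Least privilege: visible only if the user holds a role on the document's ACL;
        # documents with no ACL entry are denied by default.
        return bool(acl.get(doc_id, set()) & user_roles)

    # Filter each ranked list before fusion so restricted documents never reach the prompt.
    authorized = [[d for d in ranking if allowed(d)] for ranking in (dense, lexical)]
    fused = reciprocal_rank_fusion(authorized)
    return [doc_id for doc_id, _ in fused[:top_n]]

# Hypothetical ACL metadata and ranked results from the two indexes.
acl = {"tariff-2025": {"trade"}, "tariff-2023": {"trade"}, "salary-bands": {"hr"}}
dense = ["salary-bands", "tariff-2025", "tariff-2023"]
lexical = ["tariff-2025", "tariff-2023"]

print(hybrid_search(dense, lexical, acl, {"trade"}, top_n=2))  # ['tariff-2025', 'tariff-2023']
print(hybrid_search(dense, lexical, acl, {"marketing"}))        # []
```

Filtering before fusion, rather than after, means restricted documents never take up top-k slots or reach the prompt, and denying documents that lack an ACL entry keeps unlabeled content from leaking by default.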

How Can Solix Help Your RAG Implementation?

Solix enables enterprises to unify, govern, secure, and activate their data so it’s audit-ready, private, and available right out of the box. Our solutions combine compliant archiving, classification, cataloging, data governance policy enforcement, document and file management, and data unification, transforming fragmented content into governed, reusable assets that support analytics and AI.

Solix EAI takes this foundation further. It’s a model-agnostic platform designed to stage governed data and create a production-level RAG system that operates across your Gen-AI environments. With hybrid retrieval, re-ranking, smart chunking, policy-aware access controls (RBAC/ABAC), masking/legal holds, and audit-grade lineage, Solix EAI allows you to stage once and deploy RAG everywhere, providing accurate, citation-backed answers at enterprise scale.