Inside Data Masking: Techniques, Challenges, and Best Practices

With data breaches costing businesses an average of $4.45 million per incident in 2023, and 83% of organizations reporting more than one breach, securing sensitive data is no longer optional: it’s existential. Data masking has emerged as a critical defense, but its effectiveness hinges on how well you implement it. Let’s dive into the techniques, challenges, and best practices that separate successful strategies from costly missteps.

Techniques Used in Data Masking

Data masking isn’t one-size-fits-all. The right technique depends on how the data will be used, how secure it must remain, and which compliance and data-utility requirements apply, and each method carries its own strengths and trade-offs. Here are the most common methods:

- Substitution

- What: Replace real data with fake but realistic values.

- Value: Maintains data format and realism for testing.

- Risk: Requires a curated substitution set to avoid duplicates or unrealistic patterns.

- Example: Swapping real customer names with names from a preset list.

- Shuffling

- What: Randomly reorder the values within a column of the same dataset.

- Value: Preserves statistical distributions for analytics.

- Risk: Breaks column relationships (e.g., mismatched names and diagnoses).

- Example: Randomly reordering employee IDs within an HR database.

- Nulling Out

- What: Replace sensitive fields with NULL or placeholders.

- Value: Simple to implement.

- Risk: Reduces data utility for testing or development.

- Example: Replacing credit card numbers with NULL in development databases that don’t need them.

- Encryption

- What: Sensitive data is encrypted and can only be decrypted with the correct key.

- Value: Strong security for data at rest or in transit.

- Risk: Overhead from key management; not ideal for non-production use.

- Example: Encrypting stored health records so only holders of the decryption key can read them.

- Format-Preserving Encryption

- What: FPE encrypts data while retaining its original format.

- Value: Seamless integration with use cases that require specific data formats.

- Risk: Complexity in managing encryption keys; limited to structured data.

- Example: A 16-digit credit card number remains 16 digits.

- Tokenization

- What: Replace sensitive data with unique identification symbols (non-sensitive tokens).

- Value: Stolen tokens are worthless without access to the vault; widely used to reduce PCI DSS compliance scope.

- Risk: Requires a secure token vault and mapping logic.

- Example: Substituting a bank account number with a token during financial transactions.

- Redaction

- What: Permanently remove or redact sensitive data.

- Value: Irreversible, which helps satisfy regulations like GDPR.

- Risk: Destroys data utility for downstream processes.

- Example: Blacking out all but the last four digits of an SSN in a report.

- Pseudonymization

- What: Replace identifiers with pseudonyms, with a mapping table stored securely.

- Value: Reversible for authorized users; recognized under GDPR as a safeguard, although pseudonymized data still counts as personal data.

- Risk: Requires secure storage of the mapping table (or pseudonym keys) to prevent re-identification.

- Example: Assigning code names instead of real names (e.g., substituting “User A” for “John Doe”) for clinical research subjects.

- Anonymization

- What: Irreversibly alter data so it cannot be linked to individuals.

- Value: Minimizes re-identification risk when done properly; ideal for public datasets.

- Risk: Limits granularity for analytics and machine learning.

- Example: Replacing exact birthdates with age ranges.
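To make the differences concrete, here is a minimal Python sketch of several of the techniques above, using only the standard library. The function names, the fake-name pool, the sample values, and the in-memory `TokenVault` class are hypothetical illustrations, not part of any product. The vault also approximates pseudonymization, since both rely on a securely stored mapping between real and substitute values. Encryption and format-preserving encryption are deliberately left out: they should come from a vetted cryptographic library rather than hand-rolled code.

```python
import random
import secrets
from datetime import date

# Hypothetical substitution pool; production pools are larger and curated.
FAKE_NAMES = ["Alex Morgan", "Sam Lee", "Jordan Diaz", "Taylor Kim", "Casey Patel"]


def substitute(values, pool, rng):
    """Substitution: swap each real value for a realistic fake from `pool`.
    Drawing with replacement can repeat values (the duplicate risk above)."""
    return [rng.choice(pool) for _ in values]


def shuffle_column(values, rng):
    """Shuffling: reorder one column. Its value distribution is unchanged,
    but each value's link to the other columns in its row is broken."""
    shuffled = list(values)
    rng.shuffle(shuffled)
    return shuffled


def null_out(values):
    """Nulling out: replace every value with None."""
    return [None for _ in values]


def redact_ssn(ssn):
    """Redaction: black out all but the last four digits of an SSN."""
    digits = "".join(ch for ch in ssn if ch.isdigit())
    return "XXX-XX-" + digits[-4:]


def age_bracket(birthdate, today, width=10):
    """Anonymization by generalization: exact birthdate -> age range."""
    had_birthday = (today.month, today.day) >= (birthdate.month, birthdate.day)
    age = today.year - birthdate.year - (0 if had_birthday else 1)
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


class TokenVault:
    """Tokenization: random tokens plus a vault mapping them back to real values.
    In-memory dicts stand in for a hardened, access-controlled vault service."""

    def __init__(self):
        self._by_token = {}
        self._by_value = {}

    def tokenize(self, value):
        if value in self._by_value:  # same value -> same token
            return self._by_value[value]
        token = "tok_" + secrets.token_hex(8)  # random, so it reveals nothing about value
        while token in self._by_token:  # guard against an (unlikely) collision
            token = "tok_" + secrets.token_hex(8)
        self._by_value[value] = token
        self._by_token[token] = value
        return token

    def detokenize(self, token):
        return self._by_token[token]


rng = random.Random(7)  # seeded only so the demo is repeatable
print(substitute(["John Doe", "Jane Roe"], FAKE_NAMES, rng))
print(shuffle_column([52000, 61000, 75000], rng))
print(null_out(["4111111111111111"]))
print(redact_ssn("123-45-6789"))  # XXX-XX-6789
print(age_bracket(date(1985, 6, 15), date(2024, 1, 1)))  # 30-39
vault = TokenVault()
token = vault.tokenize("DE89370400440532013000")
print(token, vault.detokenize(token))
```

The seeded generator is only there for a repeatable demo. If an attacker can guess the seed, a shuffle or substitution can be replayed, which is why the token generator uses `secrets` instead.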

Challenges of Data Masking

While the concept of data masking sounds straightforward, executing it successfully across an enterprise presents several hurdles. One of the primary challenges is maintaining referential integrity: a value masked in one table must still match its masked counterpart in every related table and database, or joins and applications break. Performance is another concern, since large-scale masking jobs can slow down databases, especially as data volumes grow. Managing masking rules that keep changing across many data types also increases the risk of errors. On top of that, regulations like GDPR, HIPAA, and CCPA require not just masking but proof of proper data protection. Without a careful, consistent approach, masked data can become either unusable or non-compliant.
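One common way to keep referential integrity intact is deterministic masking: apply the same keyed function to a value everywhere it appears, so primary and foreign keys still line up after masking. The sketch below uses HMAC-SHA256 from Python’s standard library as that function; it is one option among several (a token vault that reuses tokens works too). The key, the table layouts, and the `mask_id` helper are hypothetical. Anyone holding the key can mask candidate values and compare results, so the key needs the same protection as a pseudonym mapping table.

```python
import hashlib
import hmac

# Hypothetical key for the demo only; in practice fetch it from a secrets manager.
MASKING_KEY = b"demo-key-do-not-use"


def mask_id(value: str, key: bytes = MASKING_KEY) -> str:
    """Deterministic masking: the same input and key always give the same output,
    so a value masked in one table still matches its copy masked in another."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "C" + digest[:16]  # truncated to 64 bits for readability


customers = [
    {"customer_id": "CUST-001", "name": "John Doe"},
    {"customer_id": "CUST-002", "name": "Jane Roe"},
]
orders = [
    {"order_id": 1, "customer_id": "CUST-001", "total": 120.00},
    {"order_id": 2, "customer_id": "CUST-002", "total": 75.50},
    {"order_id": 3, "customer_id": "CUST-001", "total": 19.99},
]

# Mask the primary key and every foreign key with the same function and key;
# the name column is simply nulled out.
masked_customers = [
    {**c, "customer_id": mask_id(c["customer_id"]), "name": None} for c in customers
]
masked_orders = [{**o, "customer_id": mask_id(o["customer_id"])} for o in orders]

# Referential integrity check: every masked foreign key still resolves to a customer.
known_ids = {c["customer_id"] for c in masked_customers}
orphans = [o for o in masked_orders if o["customer_id"] not in known_ids]
print("orphaned orders:", len(orphans))  # 0
```

Had each table been masked with independent random values instead, every order would end up orphaned, which is exactly the breakage described above.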

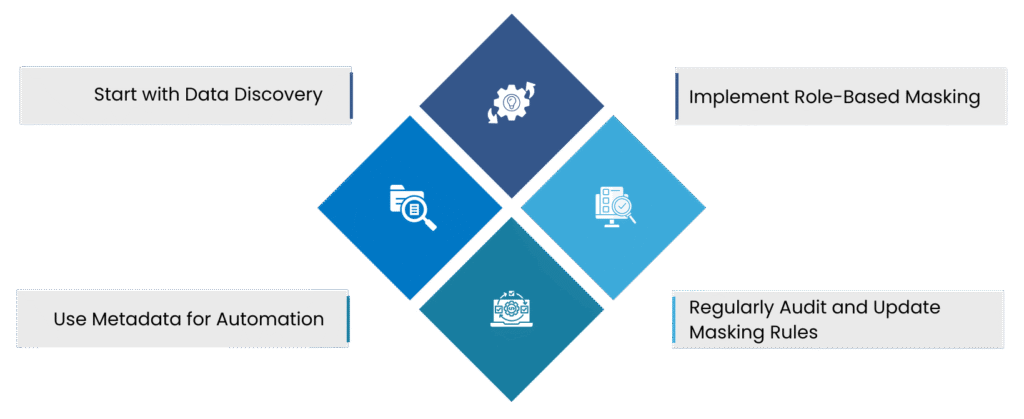

Best Practices for Data Masking

The good news? Companies that master these best practices position themselves ahead of the curve:

- Start with Data Discovery: Identify where sensitive data resides (e.g., PII in CRM systems, payment data in transactional databases). Tools like Solix Data Masking automate discovery using AI-driven classification.

- Use Metadata for Automation: Tag data with metadata (e.g., “PII,” “HIPAA-protected”) to apply masking rules programmatically, reducing human error.

- Implement Role-Based Masking: Ensure only authorized roles see unmasked data (e.g., developers get fake data, auditors see partial masking).

- Regularly Audit and Update Masking Rules: Data landscapes evolve. Periodically audit your masking policies to adapt to schema changes, new data types, and emerging security threats.
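These practices can work together: discovery produces metadata tags, and the tags drive role-based rules. The sketch below assumes a hypothetical policy in which developers receive fake values, auditors receive partial masking, a `dpo` role may see raw data, and any other role gets NULL. The regex patterns, role names, and helper functions are illustrative assumptions, not the behavior of Solix Data Masking or any other tool.

```python
import re

# Hypothetical discovery patterns; real classifiers cover far more data types.
PATTERNS = {
    "SSN": re.compile(r"^\d{3}-\d{2}-\d{4}$"),
    "EMAIL": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
}

# Hypothetical policy: metadata tag -> role -> masking rule.
# Developers get fake data; auditors get partial masking.
POLICY = {
    "SSN": {
        "auditor": lambda v: "XXX-XX-" + v[-4:],
        "developer": lambda v: "000-00-0000",
    },
    "EMAIL": {
        "auditor": lambda v: v[0] + "***@" + v.split("@", 1)[1],
        "developer": lambda v: "user@example.com",
    },
}
UNMASKED_ROLES = {"dpo"}  # hypothetical role cleared to see raw values


def discover_tags(rows):
    """Data discovery: tag a column when every non-null sample matches a pattern."""
    tags = {}
    for column in rows[0]:
        values = [str(r[column]) for r in rows if r[column] is not None]
        for tag, pattern in PATTERNS.items():
            if values and all(pattern.match(v) for v in values):
                tags[column] = tag
                break
    return tags


def mask_for_role(rows, tags, role):
    """Role-based masking driven by tags; roles without a rule fall back to NULL."""
    result = []
    for row in rows:
        masked = dict(row)  # copy so the source rows stay untouched
        for column, tag in tags.items():
            if role in UNMASKED_ROLES or masked[column] is None:
                continue
            rule = POLICY[tag].get(role)
            masked[column] = rule(masked[column]) if rule else None
        result.append(masked)
    return result


rows = [
    {"id": 1, "ssn": "123-45-6789", "email": "jane@corp.com"},
    {"id": 2, "ssn": "987-65-4321", "email": "sam@corp.com"},
]
tags = discover_tags(rows)  # {'ssn': 'SSN', 'email': 'EMAIL'}
for role in ("developer", "auditor", "intern"):
    print(role, mask_for_role(rows, tags, role)[0])
```

Because the rules hang off tags rather than column names, a renamed or newly added SSN column is masked as soon as a fresh discovery pass tags it, which is one reason to rerun discovery during regular audits.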

Final Thought

Data masking isn’t a “set and forget” solution. It demands continuous refinement, especially as regulations tighten and datasets grow. By combining the right techniques with robust practices, you can turn masked data into a strategic asset—one that fuels innovation without inviting risk.

Read Part 3: “How to Choose the Right Data Masking Tool (and What’s Next for the Industry)”

Get a checklist for evaluating data masking solutions—and discover how AI, automation, and cloud-native architectures are shaping the future of data protection.

Choosing the right data masking tool isn’t just about features—it’s about future-proofing your organization against breaches, protecting brand trust, and accelerating safe innovation. Enter Solix Data Masking, a robust, enterprise-grade solution designed to secure sensitive data across testing, development, AI/ML, and analytics use cases. Mitigate risk, empower innovation, and stay compliant—all with Solix.