AI Governance and Business-Specific Contextual Accuracy

Key Takeaways

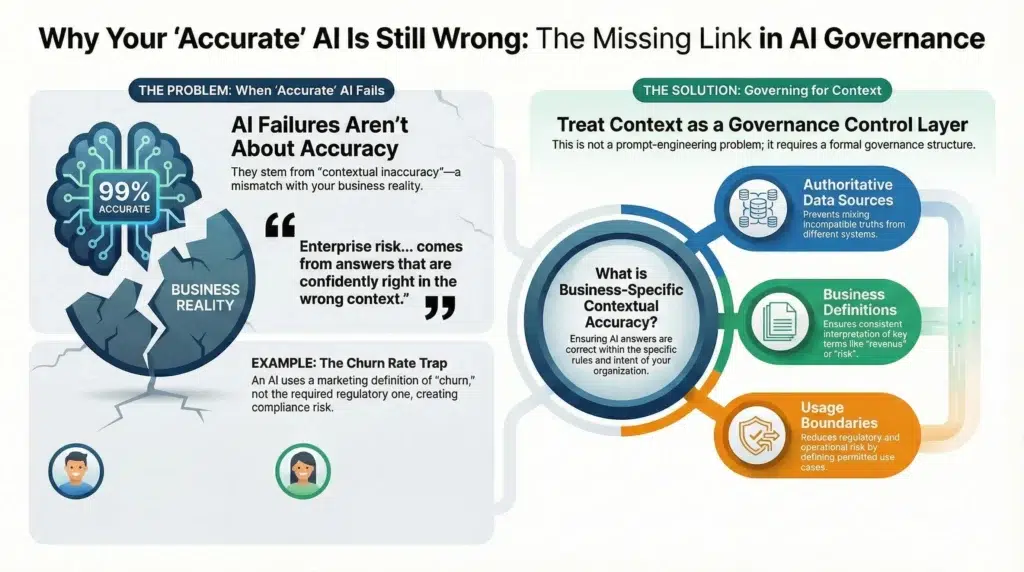

- AI governance failures rarely come from model accuracy alone. They come from contextual inaccuracy.

- An answer can be technically correct but wrong for your business, industry, or regulatory environment.

- Business-specific contextual accuracy is the missing control layer in most AI governance programs.

- Enterprises must govern data, context, and usage, not just models.

Why “accuracy” is not enough

Most AI discussions focus on accuracy as a statistical measure. Precision, recall, and benchmark scores dominate technical reviews. In enterprise environments, that definition is incomplete.

A model can be statistically accurate and still deliver outcomes that are operationally wrong, non-compliant, or misleading. This happens when AI lacks the business-specific context required to interpret data correctly.

The larger enterprise risk is not the obviously wrong answer. It is the answer that is confidently right in the wrong context.

What is business-specific contextual accuracy?

Business-specific contextual accuracy means that an AI system produces outputs that are correct within the rules, definitions, constraints, and intent of a specific organization. It answers the question:

“Is this answer correct for how our business actually operates?”

Context includes:

- Internal definitions and metrics

- Regulatory obligations and jurisdictional rules

- Contractual and policy constraints

- Data freshness and authoritative sources

- Role-based permissions and usage boundaries
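
One way to make these context elements enforceable is to store them as a structured record rather than as prose. The sketch below is illustrative only: the class name `MetricContext`, its fields, and the sample values are assumptions, not part of any specific product or standard.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class MetricContext:
    """Hypothetical record binding one business metric to its governing context."""
    metric: str                 # internal definition name, e.g. "churn_rate"
    definition: str             # approved wording of the calculation
    jurisdictions: frozenset    # where this definition is valid
    policy_refs: tuple          # contracts or policies that constrain use
    authoritative_source: str   # the single dataset allowed to answer
    max_age_days: int           # data freshness requirement
    allowed_roles: frozenset    # who may see the result

    def is_fresh(self, last_refreshed: date, today: date) -> bool:
        """True when the source was refreshed within the allowed window."""
        return today - last_refreshed <= timedelta(days=self.max_age_days)


# Sample values are invented for illustration.
churn = MetricContext(
    metric="churn_rate",
    definition="closed accounts / accounts open at period start",
    jurisdictions=frozenset({"US"}),
    policy_refs=("POLICY-EXAMPLE-1",),
    authoritative_source="accounts_ledger",
    max_age_days=1,
    allowed_roles=frozenset({"risk_analyst"}),
)
```

Each field corresponds to one item in the list above, so a missing field becomes a visible gap in governance rather than an unstated assumption.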

Where contextual accuracy breaks down

1) Generic training versus enterprise reality

Foundation models are trained on broad public and licensed datasets. They do not understand how your company defines revenue, risk, customer, or compliance unless you explicitly provide that context.

2) Conflicting internal truths

Large organizations often have multiple systems that claim to be “the source of truth”. Without governance, AI may blend incompatible definitions and present them as a single answer.

3) Regulatory blind spots

An AI answer that ignores jurisdictional or industry-specific rules can create immediate compliance exposure, even if the underlying data and reasoning are correct in a general sense.

Why this is an AI governance problem, not a prompt problem

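Many teams attempt to solve contextual accuracy with better prompts or longer instructions. This approach does not scale and does not survive audits, because a prompt maintained by one team does not show a reviewer which data and definitions were approved.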

Contextual accuracy is a governance problem because it depends on:

- Which data is authorized for use

- Which definitions are approved

- Which policies apply to which use cases

- Which users are allowed to see or act on results

- How decisions are logged, reviewed, and corrected

A concrete example

A financial services firm asks an AI assistant, “What is our customer churn rate this quarter?” The model responds with a clean, well-reasoned number.

The problem is not the math. The problem is that, for this firm, churn has a regulatory definition tied to account closures, not marketing disengagement. The AI computed the figure from the wrong internal dataset.

The answer is statistically accurate but operationally dangerous.
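
To see how far the two readings of "churn" can diverge, consider a toy calculation. Every record, field name, and the 90-day inactivity threshold below is invented for illustration; real definitions would come from the firm's approved glossary.

```python
# Hypothetical account records; every value here is invented for illustration.
accounts = [
    {"id": 1, "closed": False, "days_since_login": 120},
    {"id": 2, "closed": True,  "days_since_login": 200},
    {"id": 3, "closed": False, "days_since_login": 5},
    {"id": 4, "closed": False, "days_since_login": 95},
    {"id": 5, "closed": False, "days_since_login": 10},
]


def regulatory_churn(rows):
    """Share of accounts that were formally closed."""
    return sum(r["closed"] for r in rows) / len(rows)


def marketing_churn(rows, inactive_days=90):
    """Share of accounts with no login in `inactive_days` days (assumed threshold)."""
    return sum(r["days_since_login"] >= inactive_days for r in rows) / len(rows)


print(f"regulatory: {regulatory_churn(accounts):.0%}")  # regulatory: 20%
print(f"marketing:  {marketing_churn(accounts):.0%}")   # marketing:  60%
```

Both numbers are computed correctly from the same five accounts. Only one of them answers the question the firm is obliged to answer.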

Contextual accuracy as a control layer

Mature AI governance programs treat contextual accuracy as a first-class control, alongside security and privacy. That control layer typically includes:

| Control area | What is governed | Why it matters |
|---|---|---|
| Authoritative data sources | Which datasets can answer which business questions | Prevents mixing incompatible truths |
| Business definitions | Approved metrics, terms, and calculations | Ensures consistent interpretation |
| Usage boundaries | Permitted use cases and decision scopes | Reduces regulatory and operational risk |
| Human-in-the-loop review | When outputs require approval | Adds accountability and oversight |
| Audit and feedback | Logging, review, and correction mechanisms | Enables continuous improvement |
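
The control areas in the table can be sketched as a single check that runs before an answer is released. This is a minimal illustration under stated assumptions: `MetricPolicy`, `Decision`, `check_answer`, and the registry contents are hypothetical names and values, not a reference implementation.

```python
from dataclasses import dataclass, field


@dataclass
class MetricPolicy:
    """Hypothetical policy entry for one governed metric."""
    approved_source: str
    permitted_use_cases: set
    allowed_roles: set
    needs_human_review: bool = False


@dataclass
class Decision:
    status: str                                   # "release", "review", or "block"
    reasons: list = field(default_factory=list)


def check_answer(metric, source, use_case, role, registry, audit_log):
    """Apply the control areas to one proposed answer and log the outcome."""
    policy = registry.get(metric)
    reasons = []
    if policy is None:
        reasons.append("no approved definition for metric")
    else:
        if source != policy.approved_source:
            reasons.append("non-authoritative data source")
        if use_case not in policy.permitted_use_cases:
            reasons.append("use case outside permitted scope")
        if role not in policy.allowed_roles:
            reasons.append("role not permitted to see this result")

    if reasons:
        decision = Decision("block", reasons)
    elif policy.needs_human_review:
        decision = Decision("review", ["approval required before release"])
    else:
        decision = Decision("release")

    # Audit and feedback: record every decision, including blocked ones.
    audit_log.append({"metric": metric, "source": source, "use_case": use_case,
                      "role": role, "status": decision.status,
                      "reasons": decision.reasons})
    return decision


registry = {
    "churn_rate": MetricPolicy(
        approved_source="accounts_ledger",
        permitted_use_cases={"regulatory_reporting"},
        allowed_roles={"risk_analyst"},
        needs_human_review=True,
    )
}
audit_log = []
blocked = check_answer("churn_rate", "marketing_events", "regulatory_reporting",
                       "risk_analyst", registry, audit_log)
queued = check_answer("churn_rate", "accounts_ledger", "regulatory_reporting",
                      "risk_analyst", registry, audit_log)
```

Here `blocked` is rejected because the answer came from a non-authoritative source, while `queued` passes every check and is routed to human review. Both outcomes land in `audit_log`.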

How this aligns with modern AI governance standards

Emerging AI governance frameworks, such as the NIST AI Risk Management Framework, emphasize that AI risks are socio-technical, not purely algorithmic. This includes risks related to misunderstanding context, intent, and domain-specific constraints.

ISO/IEC 42001, for example, frames AI governance as a management system. That framing naturally supports contextual accuracy because it requires organizations to define scope, responsibilities, controls, and continuous improvement across AI use cases.

Practical steps to improve contextual accuracy

- Inventory AI use cases and map each to approved data sources and definitions.

- Define business glossaries that are enforceable, not optional documentation.

- Bind context to access so users only receive answers appropriate to their role.

- Log inputs and outputs for review and correction.

- Measure contextual errors, not just model accuracy.

- Feed corrections back into governance and training loops.
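
Measuring contextual errors separately from factual ones could look like the sketch below. It assumes reviewers tag each logged answer with a verdict. The verdict labels and the `CONTEXTUAL` taxonomy are hypothetical and would need to be defined by the governance team.

```python
from collections import Counter

# Hypothetical review log: each reviewed answer gets a reviewer verdict.
reviews = [
    {"id": "a1", "verdict": "ok"},
    {"id": "a2", "verdict": "wrong_definition"},
    {"id": "a3", "verdict": "ok"},
    {"id": "a4", "verdict": "stale_data"},
    {"id": "a5", "verdict": "factual_error"},
    {"id": "a6", "verdict": "wrong_definition"},
]

# Verdicts counted as contextual errors (an assumed taxonomy).
CONTEXTUAL = {"wrong_definition", "stale_data", "wrong_jurisdiction", "out_of_scope"}


def contextual_error_rate(rows):
    """Fraction of reviewed answers that failed for contextual reasons."""
    if not rows:
        return 0.0
    return sum(r["verdict"] in CONTEXTUAL for r in rows) / len(rows)


def error_breakdown(rows):
    """Count of each non-ok verdict, to show where corrections should go."""
    return Counter(r["verdict"] for r in rows if r["verdict"] != "ok")


print(contextual_error_rate(reviews))  # 0.5
print(error_breakdown(reviews).most_common(1))  # [('wrong_definition', 2)]
```

In this toy log, half of the reviewed answers failed on context while only one failed on facts, which is the kind of gap a model accuracy score alone would not reveal.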

Where Solix fits

Business-specific contextual accuracy depends on governed data foundations. If context lives in scattered systems, spreadsheets, and tribal knowledge, AI will fail regardless of model quality.

Solix helps enterprises centralize and govern the data, policies, and retention rules that define business context. This creates a trustworthy substrate for AI systems to deliver answers that are not just correct, but correct for the business and defensible under scrutiny.

Building an AI governance program?

If you are designing AI governance and struggling with inconsistent or risky AI outputs, Solix can share a practical framework for embedding business-specific context into your AI architecture.

FAQ

Is contextual accuracy the same as prompt engineering?

No. Prompt engineering can help guide responses, but contextual accuracy requires governed data, approved definitions, and enforceable controls.

Can RAG alone solve contextual accuracy?

Retrieval helps, but without governance over which sources are authoritative and how they are used, RAG can amplify conflicting or outdated context.
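
As a rough illustration, a governed retrieval step might drop documents that come from unapproved sources or that exceed a freshness limit before they reach the model. The source names, the `AUTHORITATIVE` mapping, and the 30-day limit below are assumptions made for this sketch.

```python
from datetime import date

# Hypothetical governance settings; source names and limits are invented.
AUTHORITATIVE = {"churn_rate": {"accounts_ledger"}}
MAX_AGE_DAYS = 30


def filter_retrieved(metric, docs, today):
    """Keep only retrieved documents from approved, sufficiently fresh sources."""
    approved = AUTHORITATIVE.get(metric, set())
    kept = []
    for doc in docs:
        age = (today - doc["updated"]).days
        if doc["source"] in approved and age <= MAX_AGE_DAYS:
            kept.append(doc)
    return kept


docs = [
    {"source": "accounts_ledger", "updated": date(2024, 6, 20), "text": "..."},
    {"source": "marketing_events", "updated": date(2024, 6, 25), "text": "..."},
    {"source": "accounts_ledger", "updated": date(2024, 1, 5), "text": "..."},
]
usable = filter_retrieved("churn_rate", docs, today=date(2024, 6, 30))
```

Only the first document survives: the second comes from an unapproved source and the third is too old. A metric with no approved sources returns nothing, so the assistant never builds an answer from ungoverned material.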

How do regulators view contextual accuracy?

Regulators focus on outcomes, accountability, and evidence. If an AI-driven decision cannot be explained in business and regulatory terms, contextual accuracy will be questioned.