What is Data Nulling?

Data Nulling is a data masking technique that replaces sensitive information with a null value or, in some implementations, a generic placeholder such as “XYZ.” The original values are removed from the masked dataset, leaving a structurally similar but meaningless version that gives unauthorized users nothing to recover.

However, null values can cause unpredictable behavior in applications, which makes nulled data a poor fit for comprehensive application testing. Running analytics or reports on nulled data is also difficult, because null values can skew the results of analytical queries.

How Does Data Nulling Work?

The basic methodology behind Data Nulling involves systematically and precisely replacing sensitive data fields with null values, rendering the original information inaccessible while preserving the structural integrity of the dataset.

- Identification of Sensitive Data: The process commences with identifying sensitive data elements within a dataset. These could include personally identifiable information (PII), financial data, or confidential information requiring protection.

- Mapping and Cataloging: Once identified, the sensitive data elements are meticulously mapped and cataloged. This step ensures a comprehensive understanding of the dataset’s composition and aids in the precise application of nulling.

- Substitution with Null Values: Nulling is executed by systematically replacing the identified sensitive data fields with null values. This substitution ensures the original data cannot be retrieved from the masked dataset while maintaining the dataset's overall structure.
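The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the field names, the `SENSITIVE_FIELDS` catalog, and the `null_fields` helper are all hypothetical, and real tools would typically discover sensitive columns from metadata rather than a hard-coded set.

```python
from copy import deepcopy

# Steps 1 and 2 (assumed): a catalog of fields already identified as sensitive.
SENSITIVE_FIELDS = {"ssn", "card_number"}


def null_fields(records, sensitive_fields):
    """Step 3: return a copy of the records with sensitive fields set to None."""
    masked = deepcopy(records)  # leave the source records untouched
    for row in masked:
        for field in sensitive_fields:
            if field in row:
                row[field] = None  # the key stays, only the value is removed
    return masked


customers = [
    {"id": 1, "plan": "basic", "ssn": "123-45-6789", "card_number": "4111111111111111"},
    {"id": 2, "plan": "pro", "ssn": "987-65-4321", "card_number": "5500000000000004"},
]

masked = null_fields(customers, SENSITIVE_FIELDS)
print(masked[0])
```

Every record keeps the same keys after masking, so the shape of the dataset is unchanged; only the sensitive values are gone.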

Benefits of Data Nulling

Data Nulling offers benefits that align with the multifaceted landscape of data security, compliance, and confidentiality. Here are a few key advantages associated with the data masking technique.

- Mitigating Data Breaches: By nullifying sensitive data fields, nulling defends against both internal and external threats. It reduces breach risk and protects critical data in non-production and production settings from unauthorized access.

- Data Privacy Compliance: It supports compliance with data protection regulations such as GDPR, PCI DSS, HIPAA, LGPD, and PIPL. Irreversibly substituting sensitive data with null values aligns with anonymization principles, bolstering compliance frameworks and reducing legal risks.

- Utility and Security: It balances data utility and security by making sensitive data unrecoverable while leaving the remaining fields usable. This lets organizations run tests and analyses on the non-sensitive fields without compromising confidentiality.

- Dynamic and Static Masking: It works with both dynamic and static data masking. In dynamic masking, null values are substituted at runtime for real-time protection. In static masking, the nulled values are written into the dataset itself, keeping non-production environments structurally uniform with production.
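The difference between the static and dynamic modes mentioned in the last item can be shown with a small Python sketch. The role name, the field set, and both helper functions are assumptions made for illustration; production systems usually enforce dynamic masking in the database or a proxy layer rather than in application code.

```python
SENSITIVE_FIELDS = {"ssn"}                  # hypothetical catalog of sensitive fields
PRIVILEGED_ROLES = {"compliance_officer"}   # hypothetical role allowed to see real values


def _nulled(record):
    """Return a copy of one record with sensitive fields replaced by None."""
    return {k: (None if k in SENSITIVE_FIELDS else v) for k, v in record.items()}


def static_mask(records):
    """Static masking: build a permanently nulled copy, e.g. to load into a test database."""
    return [_nulled(r) for r in records]


def dynamic_read(record, role):
    """Dynamic masking: stored data is unchanged; nulling is applied at read time per role."""
    if role in PRIVILEGED_ROLES:
        return dict(record)
    return _nulled(record)


production = [{"id": 1, "ssn": "123-45-6789"}]

test_copy = static_mask(production)                        # nulled for everyone, permanently
as_analyst = dynamic_read(production[0], "analyst")        # nulled on the fly
as_officer = dynamic_read(production[0], "compliance_officer")  # real value returned
```

In the static case the original value no longer exists in the masked copy, whereas in the dynamic case it remains in storage and is only hidden from roles that are not privileged.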

Limitations

While Data Nulling is a valuable data masking technique with notable advantages, it is essential to recognize its limitations and potential drawbacks. Here are some of the disadvantages associated with the masking technique.

- Loss of Data Context: One significant drawback of nulling is the loss of data context. Null values may skew analytical results, reducing the accuracy of queries and potentially leading to misinterpretation of data trends.

- Impact on Application Testing: Null values may disrupt application behavior, particularly when the application does not handle them gracefully. This can lead to unexpected errors and hinder comprehensive testing, especially where realistic scenarios are vital.

- Incompatible with Complex Relationships: Some datasets have intricate relationships or constraints between fields. Replacing sensitive fields with null values may break these dependencies, reducing both the effectiveness of the masking and the utility of the data.
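The limitations above are easy to reproduce with Python's built-in `sqlite3` module. The sketch below uses a hypothetical two-table schema (`customers`, `orders`) and made-up values; it shows an aggregate shifting after nulling, an inner join silently losing rows when a join key is nulled, and a `NOT NULL` constraint rejecting the nulling outright.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'a@example.com'), (2, 'b@example.com');
    INSERT INTO orders VALUES (1, 1, 100.0), (2, 1, 200.0), (3, 2, 600.0);
""")

# Skewed analytics: SQL aggregates skip NULLs, so the average changes.
avg_before = conn.execute("SELECT AVG(amount) FROM orders").fetchone()[0]  # 300.0
conn.execute("UPDATE orders SET amount = NULL WHERE id = 3")
avg_after = conn.execute("SELECT AVG(amount) FROM orders").fetchone()[0]   # 150.0
counts = conn.execute("SELECT COUNT(amount), COUNT(*) FROM orders").fetchone()  # (2, 3)

# Broken relationships: a nulled join key never matches, so the row drops out.
conn.execute("UPDATE orders SET customer_id = NULL WHERE id = 1")
joined_rows = conn.execute(
    "SELECT COUNT(*) FROM orders o JOIN customers c ON o.customer_id = c.id"
).fetchone()[0]  # 2 of the 3 orders remain

# Constraint conflicts: a NOT NULL column cannot be nulled at all.
try:
    conn.execute("UPDATE customers SET email = NULL WHERE id = 1")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

print(avg_before, avg_after, counts, joined_rows, rejected)
```

None of these queries raises a warning when the result changes, which is why skew introduced by nulling can go unnoticed in reports unless the nulled columns are documented.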

Use Cases

Data Nulling finds application in various scenarios where protecting sensitive information is paramount. Despite the limitations mentioned above, here are some notable use cases illustrating the practical implementation of nulling:

- Testing Environments: It is widely used in development and testing contexts to thwart unauthorized access to datasets by replacing sensitive data with null values, allowing the organization to create realistic, secure testing environments.

- Analytical Research: It nullifies sensitive data, enabling researchers in fields such as healthcare and finance to perform analyses without risking the exposure of sensitive data. Null values may sometimes skew analytical results or cause unexpected errors, so analyses should account for them.

- Data Sharing: It facilitates secure data sharing by replacing sensitive fields with null values. This ensures that external collaborators can work with the dataset without being exposed to confidential information, fostering collaboration without compromising data security.

- Development Environments: It keeps the dataset's structure intact while masking sensitive information, giving developers datasets with a realistic shape to build against. Applications can then be exercised without exposing the original sensitive values.

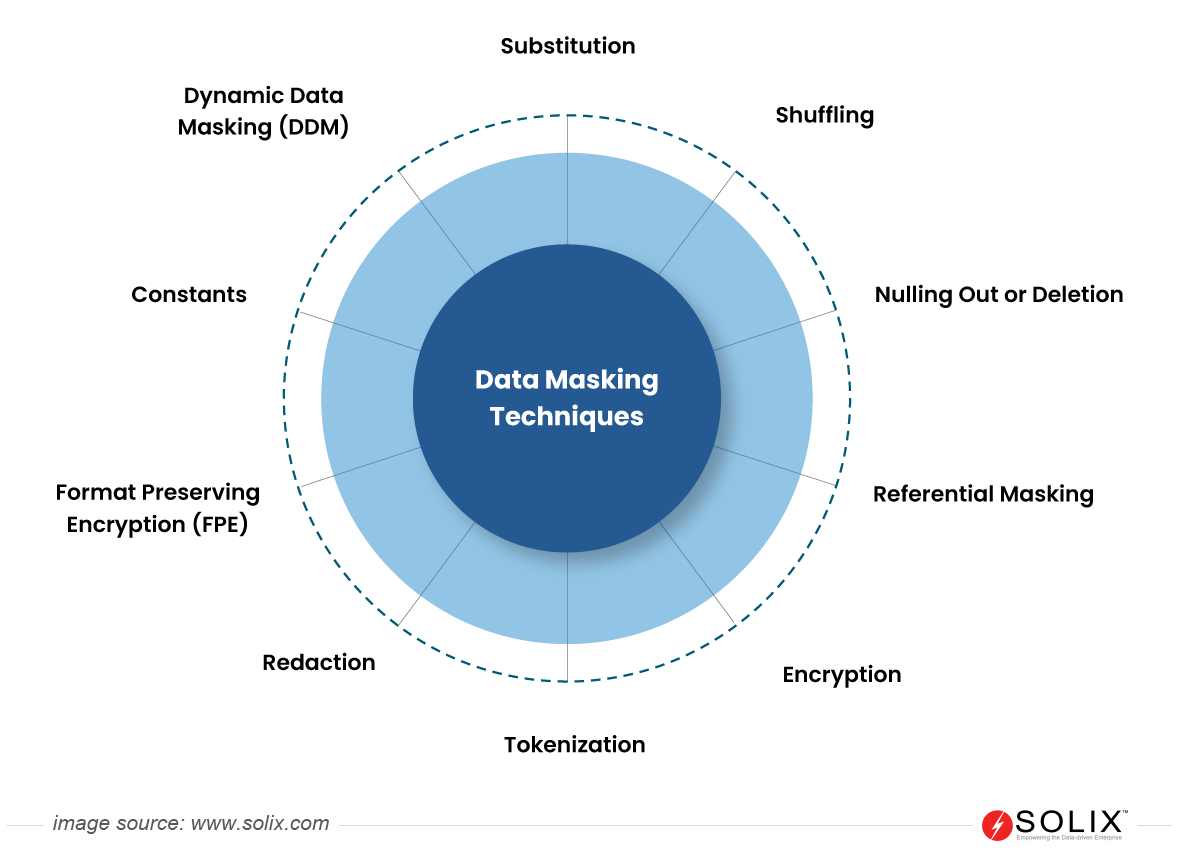

In conclusion, Data Nulling is a useful technique within the broader spectrum of data masking. Its ability to render sensitive information irretrievable while keeping the rest of the dataset usable makes it a valuable asset in non-production and analytical environments. By implementing masking techniques like Data Nulling, businesses can enhance their data management practices, mitigate risks, and uphold the integrity of their systems.

FAQ

Can Data Nulling be applied to various compliance regulations?

Yes. Data Nulling can be tailored to support regulatory requirements such as GDPR, PCI DSS, HIPAA, LGPD, and PIPL. It lets you conduct compliance testing without exposing actual sensitive data.

Are there any limitations or drawbacks to using Data Nulling?

Yes. One limitation of Data Nulling is that nulled data may not fully replicate the complexity of real-world data scenarios. Additionally, extensive nulling could affect the behavior or performance of certain applications or systems.